Streaming platform Paramount+ outdid itself this NFL season, breaking ratings records for the AFC Championship game and now the Super Bowl, which was named the “most-watched telecast in history” with 123.4 million average viewers. More than 200 million viewers tuned in at some point during the game, roughly ⅔ of the population of the United States. It also set a new benchmark as the most-streamed Super Bowl ever.

In anticipation of this level of interest, Paramount+ completed its migration to a multi-region architecture in early 2023.

Since then, the streaming platform has run across multiple Google Cloud regions on a distributed SQL database that spans multiple distant locations. Before the migration, the database tier was the biggest architectural challenge, which led the team to begin its search for a multi-master distributed database:

“Paramount+ was hosted on a single main (also known as read/write) database. A single vertically scaled main database can only take us so far. While the team considered sharding the data and spreading it out, our past experience taught us that it would be a laborious process. We started looking for a new multi-master capable database, with the criterion that we had to stick to a relational database due to the existing nature of the application. This narrowed down the options, and after some internal research and POCs we narrowed it down to a new player in the database space called YugabyteDB.”

– Quote from a member of the Paramount+ team

So how can you achieve this level of application scalability and high availability across multiple regions? In this blog, I will use an example application to analyze how services like Paramount+ can scale in multi-region settings.

A key component to achieving a true multi-regional architecture

Scaling the application layer across multiple regions is usually straightforward: select the most appropriate cloud regions, deploy application instances there, and use a global load balancer to automatically route and balance user requests.
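As a rough illustration of what a global load balancer does, the sketch below routes a user to the healthy region with the lowest latency. The region names, the latency figures, and the `pick_region` helper are hypothetical and do not correspond to any cloud provider's API.

```python
from typing import Dict, Set


def pick_region(latency_ms: Dict[str, float], healthy: Set[str]) -> str:
    """Return the healthy region with the lowest latency for this user."""
    candidates = {r: ms for r, ms in latency_ms.items() if r in healthy}
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


# Hypothetical latencies measured from a user on the US West Coast.
user_latency = {"us-east": 65.0, "us-central": 35.0, "us-west": 5.0}

# All regions healthy: the user lands in the closest region.
print(pick_region(user_latency, {"us-east", "us-central", "us-west"}))

# US West is down: the request falls back to the next-closest region.
print(pick_region(user_latency, {"us-east", "us-central"}))
```

The application tier can fail over this easily because its instances are stateless; the database tier is where the hard trade-offs begin.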

Things get more complicated when dealing with database deployments across multiple regions, especially for transactional applications that require low latency and data consistency.

It is possible to achieve global data consistency by deploying a database instance with a single primary that handles all user read and write requests.

However, this approach means that only users near the database's cloud region (for example, US East) will experience low latency for read and write requests.

Users further away from the database cloud region will face higher latency as their requests travel longer distances. In addition, an outage of the server, data center, or region where the database resides may render the application unavailable.

So getting the database right is crucial when designing a service or application for multiple regions.

Now let’s experiment with YugabyteDB, the distributed database Paramount+ used for the Super Bowl and its global streaming platform.

Two sample YugabyteDB designs for multi-region applications

YugabyteDB is a distributed SQL database built on PostgreSQL that essentially acts as a distributed version of PostgreSQL. Typically, the database is deployed in a multi-node configuration that spans several servers, availability zones, data centers, or regions.

YugabyteDB shards data across all nodes and distributes the load so that every node handles read and write requests. Transactional consistency is ensured by the Raft consensus protocol, which replicates changes synchronously among cluster nodes.
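To make the idea of sharding concrete, here is a minimal sketch of hash-based sharding, assuming a fixed number of shards whose leaders are spread round-robin over three nodes. The hash function, shard count, and node names are illustrative assumptions and not YugabyteDB's actual internals.

```python
import hashlib
from collections import Counter
from typing import List


def shard_for(key: str, num_shards: int) -> int:
    """Map a row key to a shard with a stable hash (illustrative only)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def leader_for(shard: int, nodes: List[str]) -> str:
    """Spread shard leaders round-robin so every node serves requests."""
    return nodes[shard % len(nodes)]


nodes = ["node-1", "node-2", "node-3"]  # hypothetical node names
num_shards = 6                          # hypothetical shard count
keys = [f"movie-{i}" for i in range(1000)]

# Count how many keys each node would serve as shard leader.
load = Counter(leader_for(shard_for(k, num_shards), nodes) for k in keys)
print(load)
```

Because the hash is stable, the same key always lands on the same shard, while different keys spread over all nodes.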

In multi-region database deployments, latency between regions has the greatest impact on application performance. While there is no one-size-fits-all solution for multi-regional deployments (with YugabyteDB or any other distributed transactional database), you can choose from several design patterns for global applications and configure your database to work best for your application workloads.

YugabyteDB offers eight commonly used design patterns to balance read and write latency with two key aspects of highly available systems: recovery time objective (RTO) and recovery point objective (RPO).

Let’s now review two sample designs from our list of eight—global database and follower reads—looking at the latency of our sample multi-region application.

Design Pattern #1: Global Database

The global database design pattern assumes that the database is distributed across multiple (i.e., three or more) regions or zones. If a failure occurs in one zone or region, nodes in the other zones or regions will detect the outage within seconds (RTO) and continue serving application workloads without data loss (RPO=0).
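The "three or more" requirement follows from how majority-based consensus such as Raft works: a change needs acknowledgment from a majority of replicas, so the cluster keeps working only while a majority survives. The short sketch below computes this; the function names are mine, for illustration only.

```python
def majority(replication_factor: int) -> int:
    """Smallest number of replicas that forms a majority."""
    return replication_factor // 2 + 1


def tolerated_failures(replication_factor: int) -> int:
    """Replicas that can fail while a majority is still available."""
    return replication_factor - majority(replication_factor)


for rf in (1, 3, 5):
    print(f"RF={rf}: majority={majority(rf)}, "
          f"tolerated failures={tolerated_failures(rf)}")
```

With a replication factor of 3 spread over three regions, one region can fail and the remaining two still form a majority.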

With YugabyteDB, you can reduce the number of cross-region requests by defining a preferred region. All shard (Raft) leaders will be located in the preferred region, providing low-latency reads for users near that region and predictable latency for those further away.

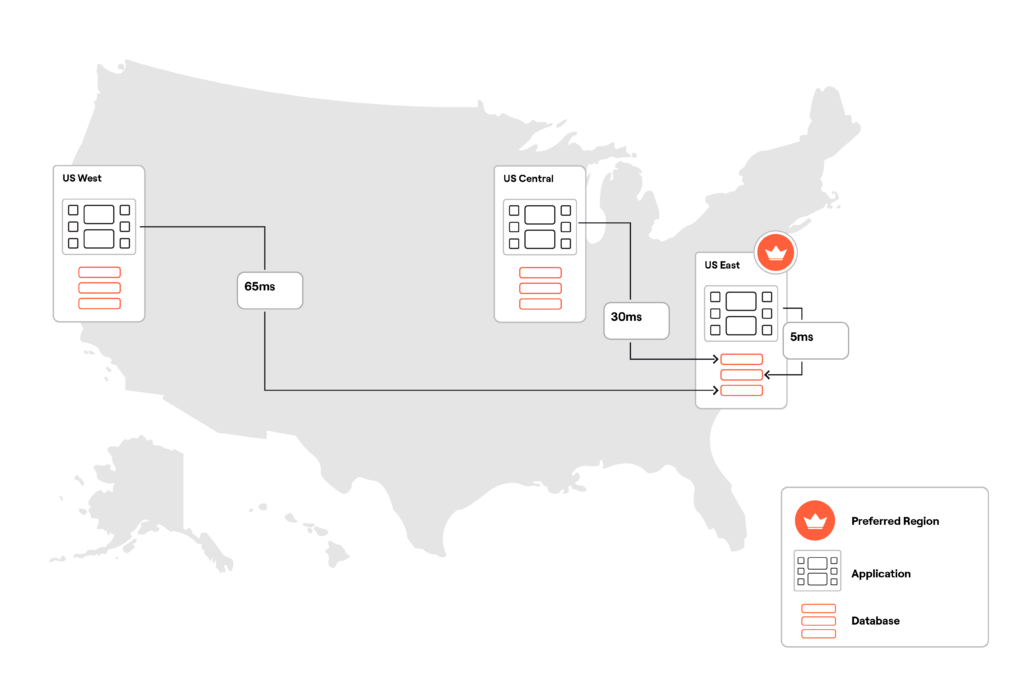

I provisioned a three-node YugabyteDB cluster in the US East, Central, and West regions, with US East configured as the preferred region. Each region hosts an application instance that is connected to the node in the preferred region (US East).

In this configuration, the round-trip latency between an application instance and the database varies with the distance from the preferred region. For example, the application instance in US East is 5 ms away from the preferred region, while the instance in US West is 65 ms away. The application instances in US West and Central are not connected directly to the database nodes in their local regions, because those nodes would automatically route all requests to the leaders in the preferred region anyway.

Our example application is a movie recommendation service that takes user questions in plain English and uses a generative AI stack (OpenAI, Spring AI, and the PostgreSQL pgvector extension) to provide users with relevant movie recommendations.

Let’s say you’re in the mood for a space adventure movie with an unexpected ending. Connect to the movie recommendation service and send the following API request:

    http GET app_instance_address:80/api/movie/search \
        prompt=='a movie about a space adventure with an unexpected ending' \
        rank==7 \
        X-Api-Key:superbowl-2024

The application performs a vector similarity search by comparing the embedding generated for the prompt parameter with the embeddings of the movie overviews stored in the database. It then identifies the most relevant movies and sends back the following response in JSON format:

    {
      "movies": [
        {
          "id": 157336,
          "overview": "Interstellar chronicles the adventures of a group of explorers who make use of a newly discovered wormhole to surpass the limitations on human space travel and conquer the vast distances involved in an interstellar voyage.",
          "releaseDate": "2014-11-05",
          "title": "Interstellar",
          "voteAverage": 8.1
        },
        {
          "id": 49047,
          "overview": "Dr. Ryan Stone, a brilliant medical engineer on her first Shuttle mission, with veteran astronaut Matt Kowalsky in command of his last flight before retiring. But on a seemingly routine spacewalk, disaster strikes. The Shuttle is destroyed, leaving Stone and Kowalsky completely alone-tethered to nothing but each other and spiraling out into the blackness of space. The deafening silence tells them they have lost any link to Earth and any chance for rescue. As fear turns to panic, every gulp of air eats away at what little oxygen is left. But the only way home may be to go further out into the terrifying expanse of space.",
          "releaseDate": "2013-09-27",
          "title": "Gravity",
          "voteAverage": 7.3
        },
        {
          "id": 13475,
          "overview": "The fate of the galaxy rests in the hands of bitter rivals. One, James Kirk, is a delinquent, thrill-seeking Iowa farm boy. The other, Spock, a Vulcan, was raised in a logic-based society that rejects all emotion. As fiery instinct clashes with calm reason, their unlikely but powerful partnership is the only thing capable of leading their crew through unimaginable danger, boldly going where no one has gone before. The human adventure has begun again.",
          "releaseDate": "2009-05-06",
          "title": "Star Trek",
          "voteAverage": 7.4
        }
      ],
      "status": {
        "code": 200,
        "success": true
      }
    }

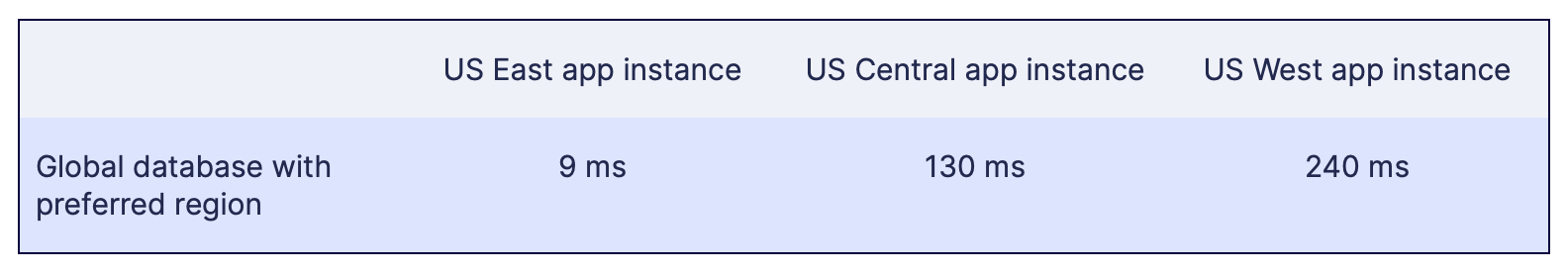
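The vector similarity search behind this response can be sketched in a few lines. This is a toy version that assumes cosine similarity as the metric (the service's actual metric is not stated here) and uses made-up three-dimensional embeddings; real embeddings have far more dimensions, and in the sample application the comparison runs inside the database via pgvector rather than in application code.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(prompt_embedding: List[float],
           overview_embeddings: Dict[str, List[float]],
           limit: int) -> List[Tuple[str, float]]:
    """Rank movies by similarity of their overview to the prompt."""
    scored = [(title, cosine_similarity(prompt_embedding, emb))
              for title, emb in overview_embeddings.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:limit]


# Made-up embeddings for illustration; not produced by any real model.
overview_embeddings = {
    "Interstellar": [0.9, 0.8, 0.1],
    "Gravity": [0.8, 0.5, 0.2],
    "A romantic comedy": [0.1, 0.2, 0.9],
}
prompt_embedding = [1.0, 0.7, 0.1]

for title, score in search(prompt_embedding, overview_embeddings, limit=2):
    print(f"{title}: {score:.3f}")
```

The point for latency is that every such search has to reach the node holding the data, and the full result set has to travel back to the application instance.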

The response time and read latency of this API call depend on which application instance received and processed your request:

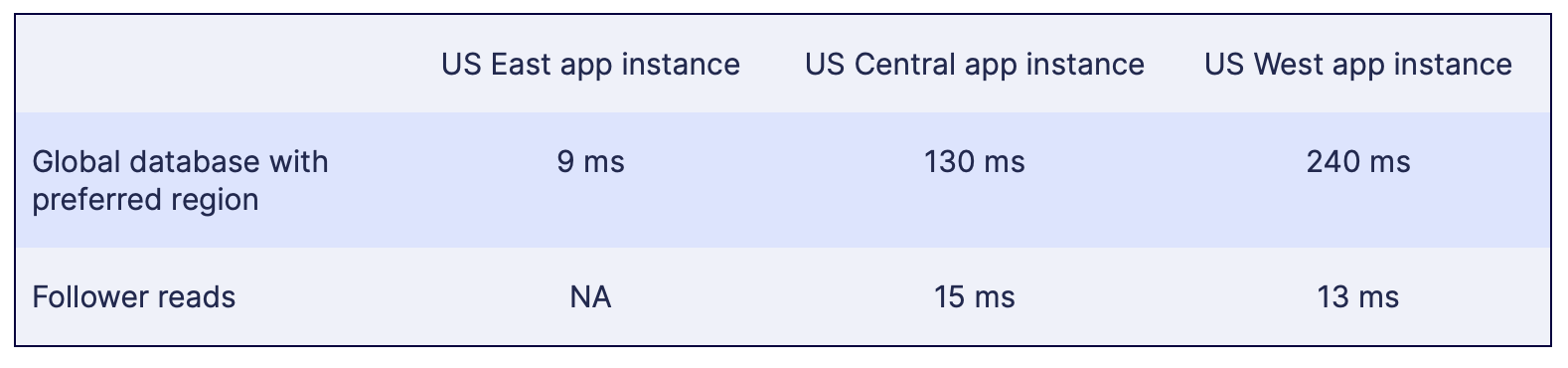

If the request originates from US East, the latency can be as low as 9 ms because the database leaders are only a few milliseconds away from the application instance based in US East. However, the latency is much higher for the application instances in US Central and US West. This is because they have to perform the vector similarity search on the database leaders in US East and then receive and process a large result set with detailed information about the suggested movies.

Note: The above numbers are not intended as the basis of performance benchmarks. I ran a simple experiment on commodity VMs with several shared vCPUs and did not perform any optimization for the software stack components. The results were just a quick functional test of this multi-regional implementation.

Now, what if you want the app to generate low-latency movie recommendations regardless of the user’s location? How can you achieve low-latency reads in all regions? YugabyteDB supports several design patterns that can achieve this, including follower reads.

Design Pattern #2: Follower reads

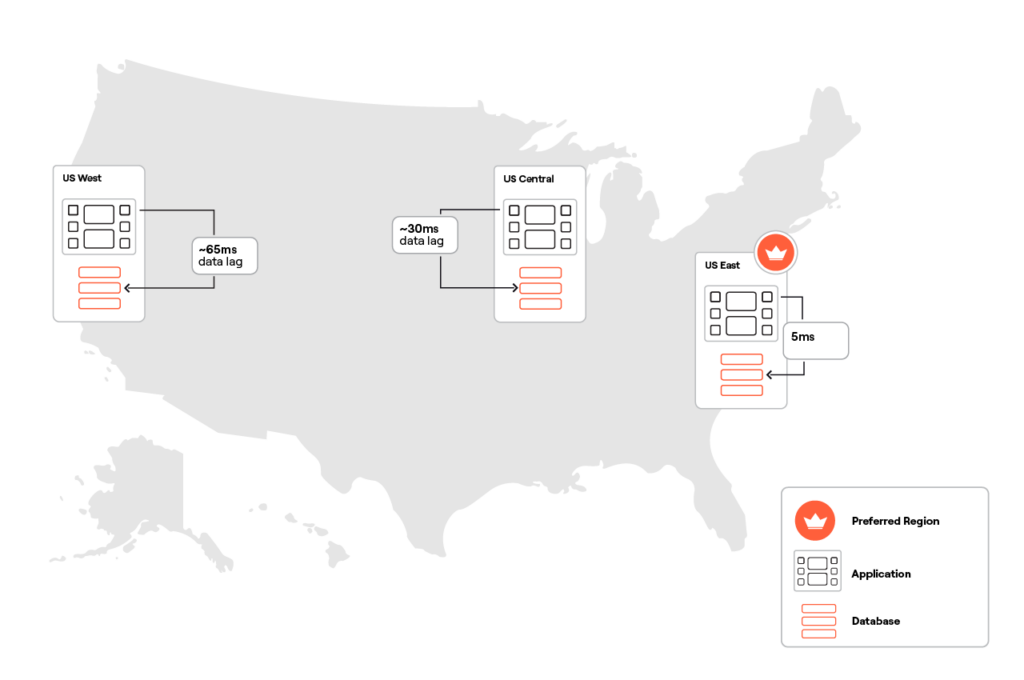

The follower read pattern allows application instances in secondary regions to read from local nodes (followers) instead of going to the leaders in the preferred region. This pattern brings read latency in those regions in line with reads served by the leaders, at the cost that followers may not have the latest data at the time of the request.

To use this pattern, I had to:

- Connect the application instances in US Central and US West to the database nodes in their own regions.

- Enable follower reads by setting the following flags for the database session:

SET session characteristics as transaction read only;

SET yb_read_from_followers = true;
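A minimal sketch of how an application might decide which session settings to apply per region is shown below. The `session_statements` helper and the region names are hypothetical; the two statements are the ones listed above, and they would be run on each new connection with whatever PostgreSQL-compatible driver the application uses.

```python
from typing import List

PREFERRED_REGION = "us-east"  # assumption: matches the cluster's preferred region


def session_statements(app_region: str) -> List[str]:
    """Statements to run on a new read connection for this app instance."""
    if app_region == PREFERRED_REGION:
        # Leaders are local here, so regular reads are already fast.
        return []
    return [
        "SET session characteristics as transaction read only;",
        "SET yb_read_from_followers = true;",
    ]


for region in ("us-east", "us-central", "us-west"):
    print(region, session_statements(region))
```

One consequence worth noting: a session marked read only cannot execute writes, so an application using this approach would keep a separate connection (or pool) for write requests.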

With this configuration, read latency is similar across regions. The trade-off is data lag: the US Central database node may be about 30 ms behind and the US West node about 65 ms behind. Why? My multi-region cluster is configured with a replication factor of 3, which means a transaction is considered committed once two of the three nodes have acknowledged the change. So if the US East and Central nodes have confirmed the transaction, the US West node may still be recording the change, which explains the lag when reading from that follower.

Despite the potential data lag, a follower's entire data set always remains in a consistent state (across all tables and other database objects). YugabyteDB ensures data consistency through its transactional subsystem and the Raft consensus protocol, which synchronously replicates changes across the multi-region cluster.
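The majority rule can be modeled in a few lines. This is a simplified sketch that assumes the leader is in US East, that the 30 ms and 65 ms figures approximate the round trip from the leader to each follower, and that disk and processing time are negligible; `commit_and_lag` is a hypothetical helper, not a YugabyteDB API.

```python
from typing import Dict, List, Tuple


def commit_and_lag(follower_rtt_ms: Dict[str, float],
                   replication_factor: int) -> Tuple[float, List[str]]:
    """Return (time until a majority holds the change, followers that may lag)."""
    majority = replication_factor // 2 + 1
    acks_needed = majority - 1  # the leader itself counts as one vote
    by_speed = sorted(follower_rtt_ms.items(), key=lambda item: item[1])
    commit_ms = by_speed[acks_needed - 1][1] if acks_needed > 0 else 0.0
    lagging = [name for name, _ in by_speed[acks_needed:]]
    return commit_ms, lagging


# Assumed round-trip times from the US East leader to each follower.
follower_rtt = {"us-central": 30.0, "us-west": 65.0}

commit_ms, lagging = commit_and_lag(follower_rtt, replication_factor=3)
print(f"committed after ~{commit_ms:.0f} ms; may still lag: {lagging}")
```

Under these assumptions the commit completes as soon as the nearest follower acknowledges, and the farthest follower is the one a local reader may briefly find behind.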

Now let’s use follower reads and send the same HTTP request to the US Central and US West instances:

    http GET app_instance_address:80/api/movie/search \
        prompt=='a movie about a space adventure with an unexpected ending' \
        rank==7 \
        X-Api-Key:superbowl-2024

Read latency is now consistently low and comparable across all regions.

Note: The US East application instance does not need to use the follower read pattern because it can work directly with the leaders in the preferred region.

A quick note about writing to multiple regions

So far, we have used a global database with preferred region and follower read design patterns to ensure low read latency at remote locations. This configuration can tolerate outages at the region level with RTO measured in seconds and RPO=0 (no data loss).

There is a write latency trade-off in this configuration. If YugabyteDB must keep a consistent copy of data across all regions, inter-region latency will affect the time it takes for the Raft consensus protocol to synchronize changes across all locations.

For example, suppose you want to watch the movie “Interstellar.” You added it to your watchlist with the following API call to the movie recommendation service (Note: 157336 is Interstellar’s internal ID):

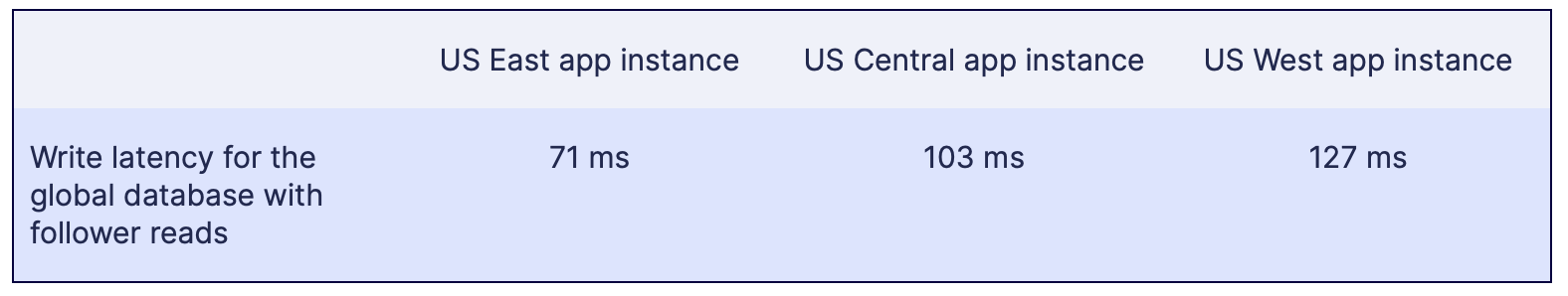

    http PUT app_instance_address:80/api/library/add/157336 X-Api-Key:superbowl-2024

In my setup, write latency varied by region.

Write latency was lowest for requests originating from the application instance in US East, which is directly connected to a database node in the preferred region (US East). Latency for writes from other locations was higher because their requests had to travel to the leaders in the preferred region before the transaction could be committed and replicated across the cluster.
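A back-of-the-envelope model shows why the originating region matters. The numbers below are assumptions for illustration and are not the measured results from this setup: 5 ms and 65 ms are the round trips to the preferred region mentioned earlier, 30 ms for US Central is assumed, and the leader is assumed to wait about 30 ms for its nearest follower to acknowledge each write.

```python
from typing import Dict

# Round trip from each application instance to the leaders in US East.
# The us-central value is an assumption.
APP_TO_LEADER_RTT_MS: Dict[str, float] = {
    "us-east": 5.0,
    "us-central": 30.0,
    "us-west": 65.0,
}

# Assumed time the leader waits for the nearest follower's acknowledgment.
QUORUM_ACK_MS = 30.0


def estimate_write_latency_ms(app_region: str) -> float:
    """Rough estimate: trip to the leader plus the wait for a majority."""
    return APP_TO_LEADER_RTT_MS[app_region] + QUORUM_ACK_MS


for region in APP_TO_LEADER_RTT_MS:
    print(f"{region}: ~{estimate_write_latency_ms(region):.0f} ms")
```

The model ignores query execution time and multi-statement transactions, which add further round trips, but it captures the ordering: the further the request starts from the preferred region, the slower the write.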

Does this mean that write latency is always high in a multi-region configuration? Not necessarily.

YugabyteDB offers several design patterns that allow you to achieve low latency reads and writes in a multi-regional environment. One such pattern is latency-optimized geopartitioning, where user data is pinned to locations closest to the users, resulting in single-digit millisecond latency for reads and writes.

Summary

Paramount+’s successful transition to a multi-region architecture shows that with the right design patterns, you can build applications that tolerate region-level outages, scale, and run with low latencies in remote locations.

The Paramount+ technical team has mastered the art of scaling by building a streaming platform that serves millions of users during peak periods with low latency and uninterrupted service. Getting the multi-region setup right is crucial. If you choose the right design pattern, you too can build multi-region applications that scale and tolerate all kinds of outages.