In an ever-expanding digital landscape, where data is generated at an unprecedented rate, database architecture stands as the foundation of effective data management. With the rise of big data and cloud technologies, along with the integration of artificial intelligence (AI), the field of database architecture has undergone a profound transformation.

This article delves into the intricate world of database architectures, exploring their adaptation to Big Data and Cloud environments, while also dissecting the evolving impact of artificial intelligence on their structure and functionality. As organizations grapple with the challenges of handling massive amounts of data in real time, the importance of robust database architectures is becoming increasingly apparent. From the traditional foundations of relational database management systems (RDBMS) to the flexible solutions offered by NoSQL databases and the scalability of cloud-based architectures, evolution continues to meet the demands of today’s data-driven landscape.

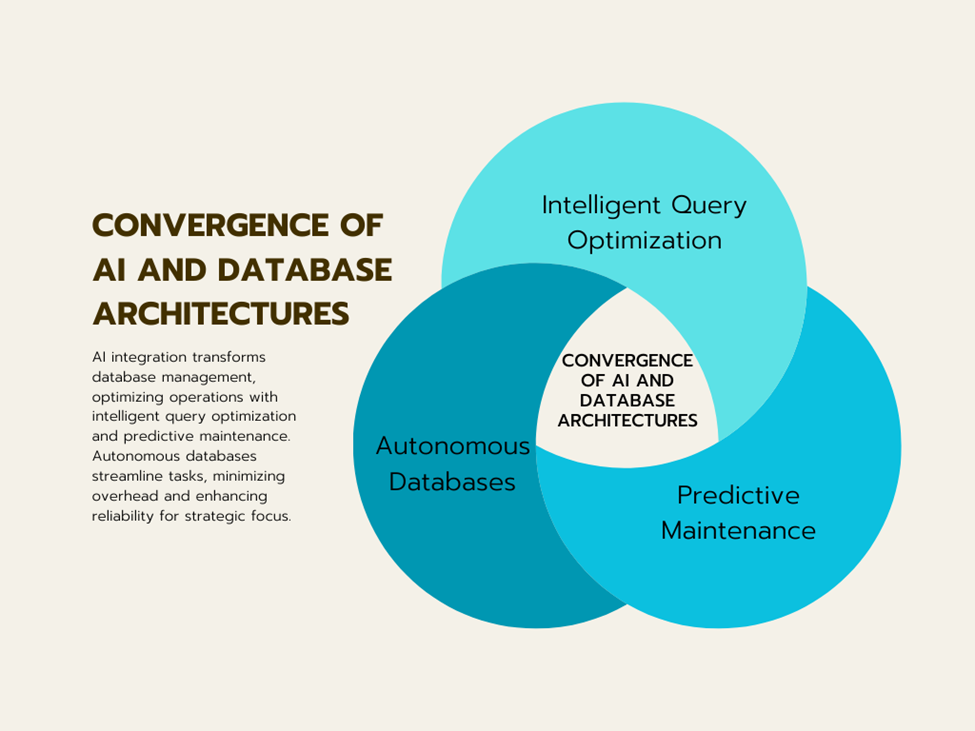

Furthermore, the convergence of AI technologies introduces new dimensions to database management, enabling intelligent query optimization, predictive maintenance and the emergence of autonomous databases. Understanding these dynamics is critical to navigating the complexities of modern data ecosystems and harnessing the full potential of data-driven insights.

Traditional foundation: Relational database management systems (RDBMS)

Traditionally, relational database management systems (RDBMS) have been the leaders in data management. Characterized by structured data organized into tables with predefined schemas, RDBMS ensures data integrity and transaction reliability through ACID (Atomicity, Consistency, Isolation, Durability) properties. Examples of RDBMS include MySQL, Oracle, and PostgreSQL.

Embracing Big Data Complexity: NoSQL Databases

The emergence of Big Data required a shift from rigid RDBMS structures to more flexible solutions capable of handling huge amounts of unstructured or semi-structured data. Enter NoSQL databases, a family of database systems designed to meet the speed, volume, and variety of Big Data (Kaushik Kumar Patel (2024)). NoSQL databases come in a variety of forms, including document-oriented, key-value stores, column family stores, and graph databases, each optimized for specific data models and use cases. Examples include MongoDB, Cassandra, and Apache HBase.

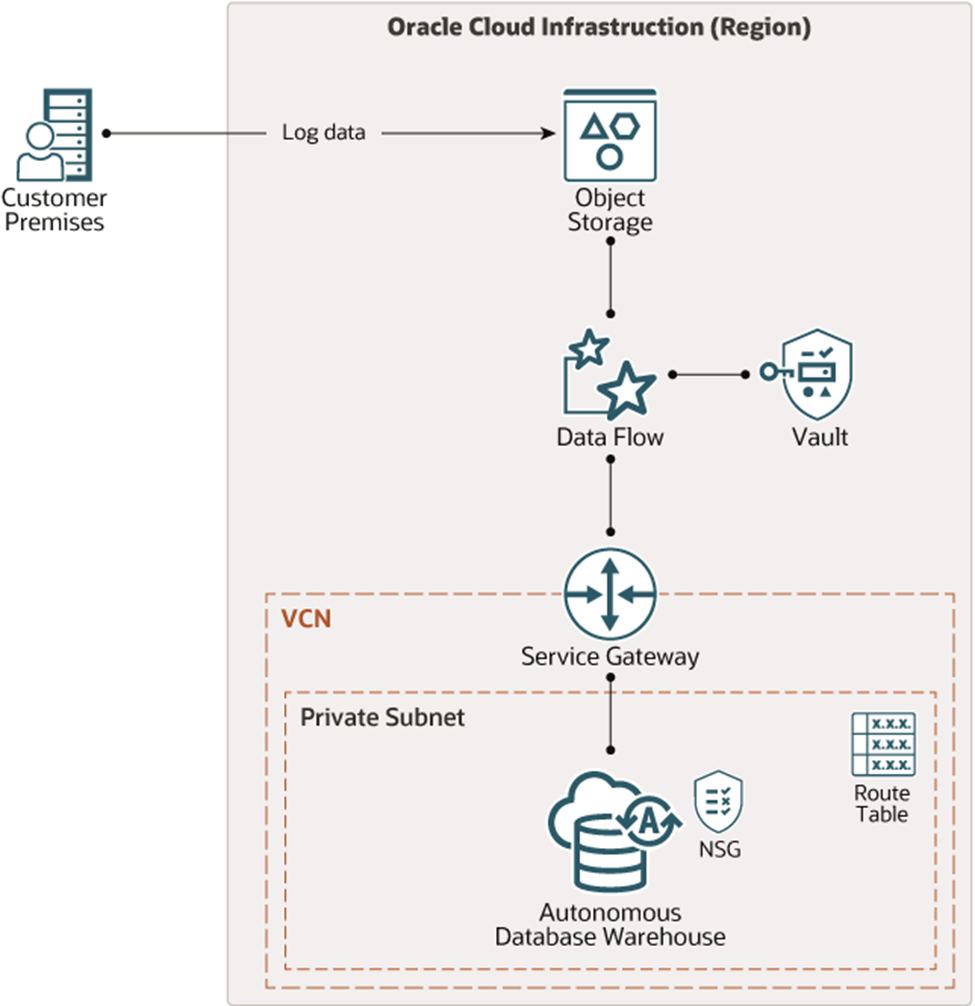

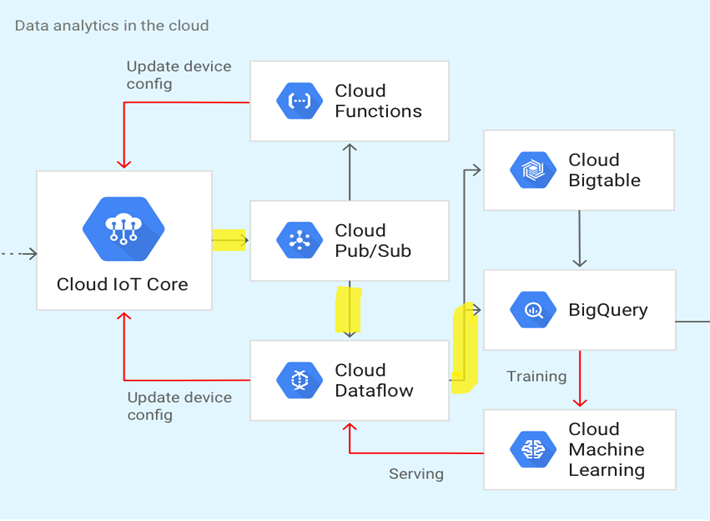

Harnessing the power of the cloud: cloud-based database architectures

Cloud-based database architectures take advantage of the scalability, flexibility, and cost-effectiveness of cloud infrastructure to provide on-demand access to data storage and processing resources. Through models such as Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Database as a Service (DBaaS), organizations can choose the level of abstraction and management that suits their needs. Multi-cloud and hybrid cloud architectures further increase flexibility by enabling workload distribution across multiple cloud providers or integration with on-premises infrastructure (Hichem Moulahoum, Faezeh Ghorbanizamani (2024)). Notable examples include Amazon Aurora, Google Cloud Spanner, and Microsoft Azure Cosmos DB.

Data flow and storage: on-premises versus cloud databases

Understanding data flow and storage is critical to effectively managing on-premises and cloud databases. Here’s a breakdown with a database architect (DBA) diagram for each scenario:

Local database

Explanation

- Application server: This interacts with the database, triggering the creation, retrieval and updating of data.

- Data extraction: This process, often using extract, transform, load (ETL) or extract, load, transform (ELT) methodologies, extracts data from various sources, converts it into a database-compatible format, and loads it.

- Data base: This is the fundamental location for storing, managing and organizing data using specific structures such as relational tables or NoSQL document stores.

- Storage: This represents the physical storage devices such as hard disk drives (HDD) or solid-state drives (SSD) that hold the database files.

- Security system: Regular backup is essential for disaster recovery and ensuring data availability.

Data flow

- Applications interact with the database server, sending requests to create, retrieve and update data.

- The ETL/ELT process extracts data from various sources, transforms it and loads it into a database.

- Data is stored within the database mechanism, organized according to its specific structure.

- Storage devices physically hold database files.

- Backups are periodically created and stored separately for data recovery purposes

Cloud database

Explanation

- Application server: Like the on-premises scenario, this interacts with the database, but through an API gateway or SDK provided by the cloud provider.

- API Gateway/SDK: This layer acts as an abstraction, hiding the complexity of the underlying infrastructure and providing a standardized way for applications to interact with the cloud database.

- Cloud database: This is a managed service offered by cloud service providers that automatically manages database creation, maintenance, and scaling.

- Cloud storage: This represents the cloud provider’s storage infrastructure, where database files and backups are stored.

Data flow

- Applications communicate with the cloud database through an API gateway or SDK, sending requests for data.

- The API Gateway/SDK translates the requests and communicates with the cloud database service.

- A cloud database service manages data persistence, organization, and retrieval.

- The data is stored within the cloud service provider’s storage infrastructure.

Key differences

- Management: On-premise databases require in-house expertise for setup, configuration, maintenance and backups. Cloud databases are managed services, with the provider managing these aspects, freeing up IT resources.

- Scalability: On-premises databases require manual scaling of hardware resources, while cloud databases offer elastic scaling, automatically adapting to changing needs.

- Security: Both options require security measures such as access control and encryption. However, cloud providers often have robust security infrastructure and compliance certifications.

The convergence of AI and database architecture

The integration of artificial intelligence (AI) into database architectures heralds a new era of intelligent data management solutions. Artificial intelligence technologies such as machine learning and natural language processing augment database functionality by enabling automated data analysis, prediction and decision making. These improvements not only simplify operations, but also open new avenues for optimizing database performance and reliability.

Intelligent query optimization

In the field of intelligent query optimization, AI-powered techniques are revolutionizing the way databases handle complex queries. By analyzing workload patterns and system resources in real-time, AI algorithms dynamically adjust query execution plans to improve efficiency and reduce latency. This proactive approach ensures optimal performance, even in the face of fluctuating workloads and evolving data structures.

Predictive maintenance

Predictive maintenance, powered by artificial intelligence, is changing the way organizations manage database health and stability. Using historical data and predictive analytics, AI algorithms predict potential system failures or performance bottlenecks before they occur. This prediction enables proactive maintenance strategies, such as resource allocation and system upgrades, mitigating downtime and optimizing database reliability.

Autonomous databases

Autonomous databases represent the pinnacle of innovation in AI-driven database architectures. These systems use AI algorithms to automate routine tasks, including performance tuning, security management, and data backups. By autonomously optimizing database configuration and resolving security vulnerabilities in real-time, autonomous databases reduce operational costs and increase system reliability. This newfound autonomy allows organizations to focus on strategic initiatives instead of routine maintenance tasks, driving innovation and efficiency across the enterprise.

Looking to the future: Trends and challenges

As the trajectory of database architecture evolves, a spectrum of trends and challenges attract our attention:

Edge computing

The proliferation of Internet of Things (IoT) devices and the rise of edge computing architectures herald a shift toward decentralized data processing. This requires the development of a distributed database solution that can efficiently manage and analyze data at the edge of the network, optimize latency and bandwidth utilization while providing real-time insights and responsiveness.

Data privacy and security

In the era of increasing amount of data, the preservation of privacy and data security assumes the highest importance (Jonny Bairstow, (2024)). As regulatory frameworks tighten and cyber threats escalate, organizations must navigate a complex data management landscape to ensure compliance with strict regulations and strengthen defenses against new security vulnerabilities, protecting sensitive information from breaches and unauthorized access.

Federated Data Management

The proliferation of different data sources across different systems and platforms highlights the need for federated data management solutions. Federated database architectures offer a cohesive framework for seamless integration and access to distributed data sources, facilitating interoperability and enabling organizations to leverage the full spectrum of their data assets for informed decision making and actionable insights.

Quantum databases

The advent of quantum computing heralds paradigm shifts in database architectures, promising exponential leaps in computational power and algorithmic efficiency. Quantum databases, which use the principles of quantum mechanics, have the potential to revolutionize data processing by enabling faster computations and more sophisticated analytics for complex data sets. As quantum computing matures, organizations must prepare to embrace these transformative opportunities, using quantum databases to unlock new frontiers in data-driven innovation and discovery.

Conclusion

The evolution of database architecture reflects the relentless march of technological progress. From the rigid structures of traditional RDBMS to the flexibility of NoSQL databases and the scalability of cloud-based solutions, databases have adapted to meet the growing needs of data-intensive applications. Moreover, the integration of artificial intelligence increases the functionality of the database, paving the way for more intelligent and automated data management solutions. As we move into the future, meeting new challenges and embracing innovative technologies will be key in shaping the next generation of database architectures.

References

- Kaushikkumar Patel (2024), Mastering Cloud Scalability: Strategies, Challenges, and Future Directions: Navigating Complexities of Scaling in the Digital Era

- Hichem Moulahoum, Faezeh Ghorbanizamani (2024), Managing the development of silver nanoparticle-based food analysis through the power of artificial intelligence

- D. Dhinakaran, SM Udhaya Sankar, D. Selvaraj, S. Edwin Raja (2024), Privacy Preserving Data in IoT-Based Cloud Systems: A Comprehensive Survey with Artificial Intelligence Integration

- Mihaly Varadi, Damian Bertoni, Paulyna Magana, Urmila Paramval, Ivanna Pidruchna, (2024), The AlphaFold Protein Structure Database in 2024: providing structural coverage for more than 214 million protein sequences

- Jonny Bairstow, (2024), “Navigating the Confluence: Big Data Analytics and Artificial Intelligence – Innovations, Challenges and Future Directions”