Artificial intelligence is quickly becoming part of our mobile experience, with Google and Samsung leading the way. Apple, however, is also making significant strides in AI within its ecosystem. Recently, the Cupertino tech giant unveiled a project known as MM1, a multimodal large language model (MLLM) that can process both text and images. Now a new study describes another MLLM, this one designed to understand the nuances of mobile screen interfaces. The paper, which was published on Cornell University's arXiv preprint server and spotted by AppleInsider, is titled “Ferret-UI: Grounded Mobile User Interface Understanding with Multimodal LLM.”

Ferret-UI is a new MLLM tailored for better understanding of mobile user interface screens, equipped with referring, grounding and reasoning capabilities.

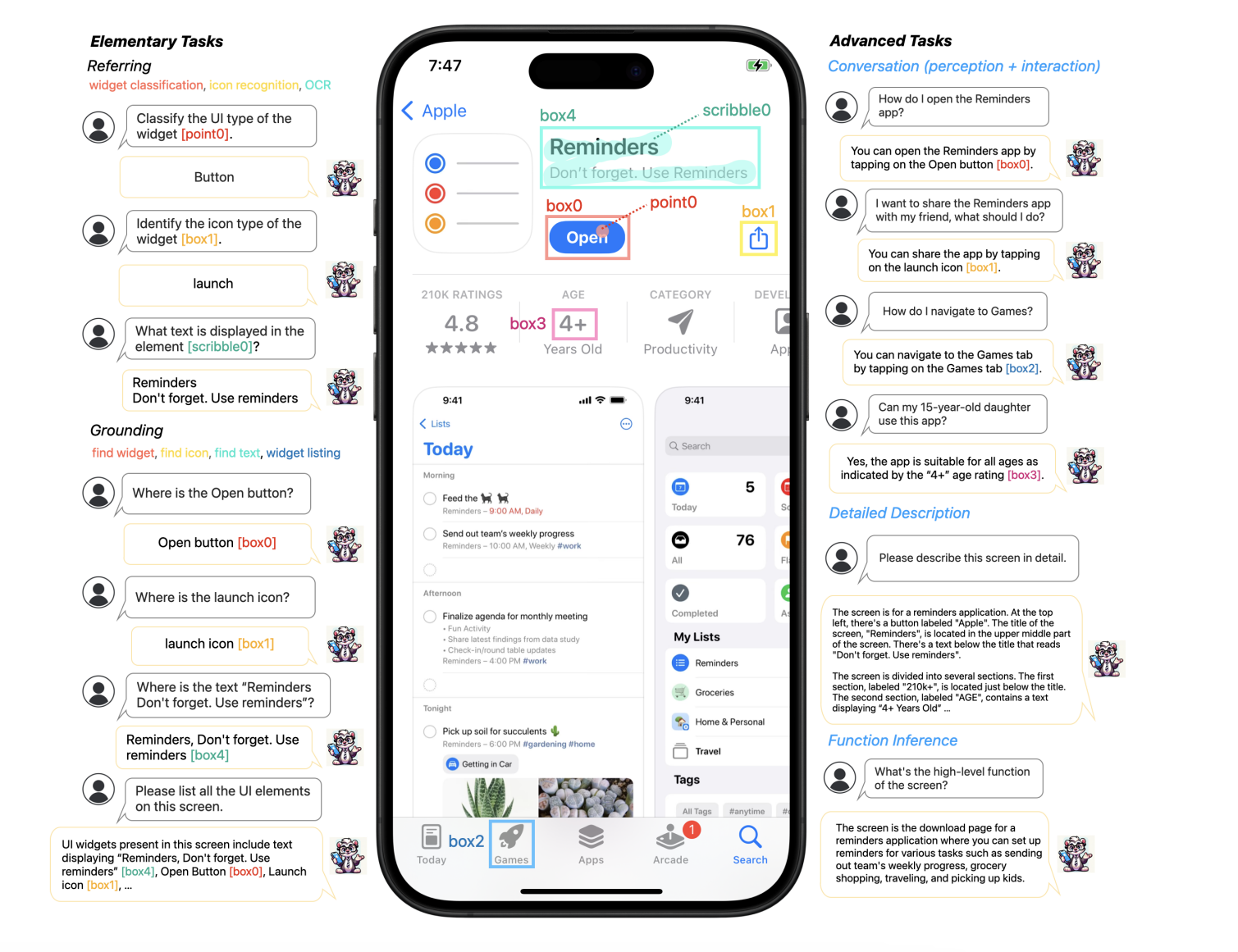

Ferret-UI in action, analyzing an iPhone screen (image credit: Apple)

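Mobile screens pose a particular challenge for such models: they tend to have more elongated aspect ratios than natural images, and the objects of interest, such as icons and text, are small. To solve this, Ferret-UI introduces a zoom feature that improves the readability of screen elements by magnifying details and scaling the image to the desired resolution. This capability could meaningfully change how AI interacts with mobile interfaces.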
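As a rough illustration of the idea, the paper describes dividing each screen into two sub-images based on its aspect ratio (a horizontal cut for portrait screens, a vertical cut for landscape ones) so that each part can be magnified separately. The Python sketch below only computes the crop boxes for such a split. The function names `split_screen` and `scale_factor`, the example screen size and the target size of 336 pixels are assumptions made for illustration, not details of Apple's implementation.

```python
from typing import List, Tuple

# A crop box in pixels: (left, top, right, bottom).
Box = Tuple[int, int, int, int]


def split_screen(width: int, height: int) -> List[Box]:
    """Return two crop boxes that together cover the whole screen.

    Hypothetical helper: portrait screens are cut along a horizontal line,
    landscape screens along a vertical line.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if height >= width:
        # Portrait (or square): top half and bottom half.
        mid = height // 2
        return [(0, 0, width, mid), (0, mid, width, height)]
    # Landscape: left half and right half.
    mid = width // 2
    return [(0, 0, mid, height), (mid, 0, width, height)]


def scale_factor(box: Box, target: int) -> float:
    """Factor that resizes the box so its longer side is `target` pixels."""
    left, top, right, bottom = box
    return target / max(right - left, bottom - top)


if __name__ == "__main__":
    # Example portrait screen size in pixels (an assumed value).
    for box in split_screen(1170, 2532):
        print(box, round(scale_factor(box, 336), 3))
```

Each half covers fewer pixels than the full screen, so resizing it to the same model input size leaves small icons and text relatively larger than they would be if the whole screen were downscaled at once.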

According to the paper, Ferret-UI excels at recognizing and categorizing widgets, icons and text on mobile screens. It supports different input methods like pointing, boxing or scribbling. By performing these tasks, the model gains good insight into visual and spatial data, which helps it accurately distinguish between different elements of the user interface.

What sets Ferret-UI apart is its ability to work directly with raw screen pixel data, eliminating the need for external detection tools or screen view files. This approach significantly improves single-screen interactions and opens up possibilities for new applications, such as improving device accessibility. The research paper highlights Ferret-UI’s proficiency in tasks related to referring, grounding and reasoning. This finding suggests that advanced AI models like Ferret-UI could reshape UI interaction, offering more intuitive and efficient user experiences.

What if Ferret-UI integrates with Siri?

While it hasn’t been confirmed whether Ferret-UI will be integrated into Siri or other Apple services, the potential benefits are intriguing. By giving a model a better understanding of mobile user interfaces through a multimodal approach, Ferret-UI could significantly enhance voice assistants like Siri in several ways.

This could mean that Siri gets better at understanding what users want to do within apps, perhaps even handling more complicated tasks. Additionally, it could help Siri better understand the context of a query by taking into account what’s on the screen. Ultimately, this could make using Siri a smoother experience, letting it handle actions like navigating through apps or interpreting what is shown on the screen.