Apple researchers have published a paper on arXiv, a repository of preprints that have not necessarily been peer-reviewed, describing ‘Ferret-UI,’ a multimodal large language model (MLLM) designed to understand smartphone application user interfaces.

[2404.05719] Ferret-UI: Grounded Mobile User Interface Understanding with Multimodal LLM

https://arxiv.org/abs/2404.05719

Apple teaches AI system to use apps; maybe for advanced Siri

https://9to5mac.com/2024/04/09/ferret-ui-advanced-siri/

Large language models (LLMs), which underpin chatbot AI systems such as ChatGPT, learn from huge amounts of text, mostly collected from web pages, while MLLMs such as Google Gemini learn not only from text but also from non-textual information such as images, video, and audio.

However, MLLMs are not considered to perform well at understanding smartphone app screens. One reason is that most images and videos used for training have a landscape aspect ratio, unlike the typically portrait screens of smartphones. Another is that the user interface objects that need to be recognized on a smartphone, such as icons and buttons, are smaller than the objects in natural images.

Announced by Apple researchers, Ferret-UI is a generative AI system designed to recognize mobile app screens on smartphones.

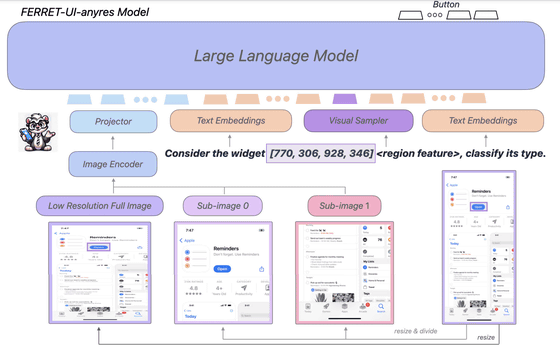

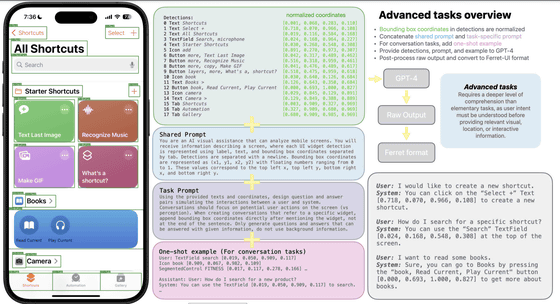

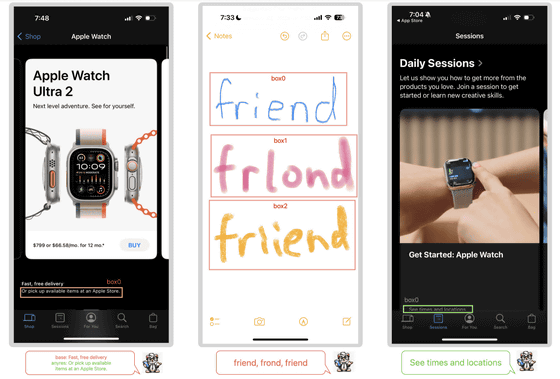

Smartphone user interface screens typically have an elongated aspect ratio and contain small objects such as icons and text. To address this, Ferret-UI introduces a technique called ‘any resolution’ that magnifies image detail and uses enhanced visual features, allowing it to accurately recognize UI details regardless of screen resolution.

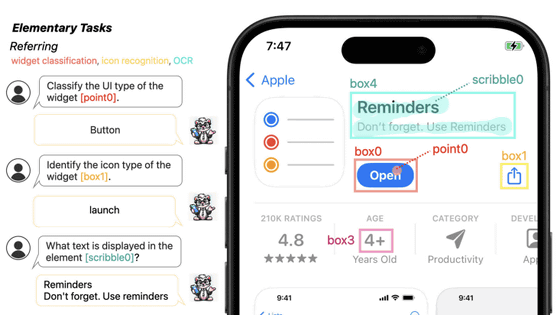

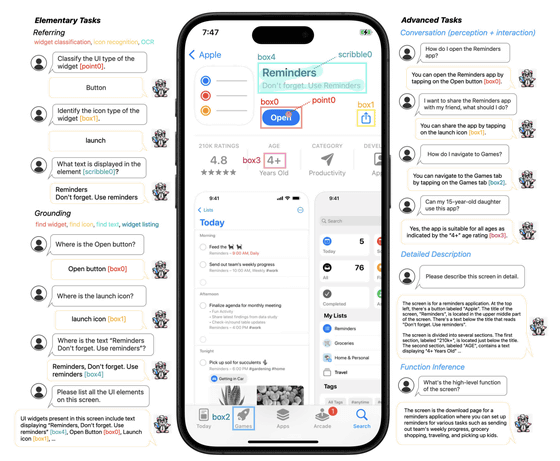
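As a rough illustration of the ‘any resolution’ idea, the sketch below assumes, following the paper's description, that a screen is divided into two sub-images along its longer side and that these are processed alongside the full screen. The function names and the plain pixel-box representation are hypothetical and chosen only for this example; this is not Apple's implementation.

```python
from typing import List, Tuple

# A crop box in pixels: (left, top, right, bottom).
Box = Tuple[int, int, int, int]


def split_screen(width: int, height: int) -> List[Box]:
    """Return two crop boxes that halve the screen along its longer side.

    Assumption for this sketch: portrait screens are cut into top and
    bottom halves, landscape screens into left and right halves.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if height >= width:
        # Portrait (or square): top half, then bottom half.
        mid = height // 2
        return [(0, 0, width, mid), (0, mid, width, height)]
    # Landscape: left half, then right half.
    mid = width // 2
    return [(0, 0, mid, height), (mid, 0, width, height)]


def model_inputs(width: int, height: int) -> List[Box]:
    """Full-screen box first, followed by the two sub-image boxes."""
    return [(0, 0, width, height)] + split_screen(width, height)


if __name__ == "__main__":
    # Example with a portrait screen of 1170 x 2532 pixels.
    for box in model_inputs(1170, 2532):
        print(box)
```

Because each half covers fewer pixels than the whole screen, small icons and text take up a larger share of each sub-image once it is resized for the image encoder, which is the intuition behind the magnified detail.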

The researchers also carefully collected a wide range of training examples for basic UI tasks, such as icon recognition, text search, and widget listing. These examples are annotated with the screen regions they refer to, making it easier to associate language with specific parts of the image and to locate them accurately. In other words, Ferret-UI learns to correctly understand a wide variety of user interfaces by training on a large number of concrete user interface examples.
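To make the idea of region-annotated training examples more concrete, here is a minimal sketch of how a widget-listing sample might be assembled from detected UI elements. The `UIElement` class, the question wording, and the answer format are all hypothetical and invented for illustration; the actual data format used for Ferret-UI may differ.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class UIElement:
    kind: str                        # e.g. "icon", "text", "button"
    label: str                       # visible text or a short description
    box: Tuple[int, int, int, int]   # (left, top, right, bottom) in pixels


def widget_listing_sample(elements: List[UIElement]) -> Dict[str, str]:
    """Build one hypothetical question/answer pair that lists every widget
    together with its bounding box, tying each phrase to a screen region."""
    answer = "; ".join(
        f"{e.kind} '{e.label}' at {list(e.box)}" for e in elements
    )
    return {"question": "List all the widgets on this screen.", "answer": answer}


if __name__ == "__main__":
    # Made-up elements, not taken from any real dataset.
    screen = [
        UIElement("text", "Welcome back", (40, 120, 600, 180)),
        UIElement("button", "Sign in", (40, 900, 350, 960)),
    ]
    print(widget_listing_sample(screen))
```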

According to the paper, Ferret-UI performs better than GPT-4V and other existing UI-aware MLLMs. This suggests that Ferret-UI’s ‘any resolution’ technique, large and diverse training data, and support for advanced tasks are very effective in understanding and operating user interfaces.

To further improve Ferret-UI’s reasoning ability, the researchers also compiled datasets for advanced tasks, such as detailed description, perception/interaction conversations, and function inference. These are intended to let Ferret-UI go beyond simple UI recognition to a more abstract understanding of, and more complex interaction with, the user interface.

If Ferret-UI is put into practical use, it is expected to improve accessibility. For people who cannot see the smartphone screen because of a visual impairment, the AI could summarize what is shown on the screen and convey it to them. In addition, in smartphone application development, having Ferret-UI recognize screens could allow faster checking of how clear and easy to use an application’s user interface is.

Furthermore, because it is a multimodal AI optimized for smartphones, it could potentially be combined with Siri, the AI assistant built into the iPhone, to automate more advanced tasks in any app.