In the world of machine learning, there was a limit to models — they could only process one type of data at a time. However, the ultimate aspiration of machine learning is to rival the cognitive power of the human mind, which effortlessly comprehends different modalities of data simultaneously. Recent breakthroughs, demonstrated by models like the GPT-4V, have now demonstrated the remarkable ability to handle multiple data modalities simultaneously. This opens up exciting opportunities for developers to create AI applications capable of seamlessly managing different types of data, known as multimodal applications.

One compelling use case that has gained immense popularity is multimodal image search. It allows users to find similar images by analyzing features or visual content. Thanks to rapid advances in computer vision and deep learning, image search has become incredibly powerful.

In this article, we will create a multimodal image search application using models from the Hugging Face library. Before we get into the practical implementation, let’s go through some basics to set the stage for our exploration.

What is a multimodal system?

A multimodal system refers to any system that can use more than one mode of interaction or communication. This means a system that can process and understand different types of input at the same time, such as text, images, voice, and sometimes even touch or gestures, and can also return results in different ways.

For example, GPT-4V(opens a new window, developed by OpenAI, is an advanced multimodal model that can handle multiple “modalities” of text and image input at the same time. When given an image accompanied by a descriptive query, the model can analyze the visual content based on the specified text.

What are multimodal embeddings?

Multimodal embedding, an advanced machine learning technique, is the process of generating a numerical representation of multiple modalities, such as images, text, and sound, in a vector format. Unlike basic embedding techniques, which represent only one type of data in a vector space, multimodal embedding can represent different types of data within a unified vector space. This enables, for example, the correlation of a textual description with the corresponding image. With the help of multimodal embeddings, the system could analyze the image and associate it with relevant textual descriptions or vice versa.

Now let’s discuss how to develop this project and the technologies we will use.

We will use CLIP(opens a new window, MyScale (opens a new window)and the Unsplash-25k dataset (opens a new window)in this project. Let’s look at them in detail.

- PISTON: You will use a pre-trained multimodal CLIP (opens a new window)developed by OpenAI from Hugging Face. This model will be used to integrate text and images.

- MyScale: MyScale is a SQL vector database used to store and process structured and unstructured data in an optimized way. You will use MyScale to store vector inserts and query relevant images.

- Unsplash-25k dataset: The dataset provided by Unsplash contains about 25 thousand images. Includes some complicated scenes and objects.

How to set up Hugging Face and MyScale

To start using Hugging Face and MyScale in a local environment, you need to install some Python packages. Open a terminal and enter the following pip command:

Download and upload the dataset

The first step is to download the dataset and extract it locally. You can do this by entering the following commands into your terminal.

| photo_id | photo_url | photo url_images |

|---|---|---|

| xapxF7PcOzU | https://unsplash.com/photos/wud-eV6Vpwo | https://images.unsplash.com/photo-143924685475… |

| psIMdj26lgw | https://unsplash.com/photos/psIMdj26lgw | https://images.unsplash.com/photo-144077331099… |

The difference between photo_url and photo_image_url is that photo_url contains the URL to the image description page, telling about the author and other meta information about the photo. The photo_image_url it only contains the URL of the image, and we will use it to download the image.

Load the model and get the embeds

After loading the dataset, let’s first load clip-vit-base-patch32 (opens a new window)model and write a Python function to transform images into vector inserts. This function will use the CLIP model to represent embeddings.

- If you specify both image and text, the code returns a single vector, combining the embeddings of both.

- If you specify text or an image (but not both), the code simply returns an embedding of the specified text or image.

Note: We use the basic way to merge two inserts just to focus on the multimodal concept. But there are some better ways to combine insertions, like chaining and attention mechanisms.

We will load, download and pass the first 1000 images from the dataset to the above create_embeddings function. The returned embeds will then be stored in a new column photo_embed.

After this process, our dataset is complete. The next step is to create a new table and store the data in MyScale.

Connect to MyScale

To connect the app to MyScale, you will need to perform several setup and configuration steps.

Once you have the connection details, you can replace the values in the code below:

Make a table

Once the connection is established, the next step is to create the table. Now let’s first look at our data frame with this command:

| photo_id | photo url_images | photo_embed |

|---|---|---|

| wud-eV6Vpwo | https://images.unsplash.com/uploads/1411949294… | [000287541048601269720027609225362539290[000287541048601269720027609225362539290[000287541048601269720027609225362539290[000287541048601269720027609225362539290 |

| psIMdj26lgw | https://images.unsplash.com/photo-141633941111… | [0019032524898648262-0041982628405094150[0019032524898648262-0041982628405094150[0019032524898648262-0041982628405094150[0019032524898648262-0041982628405094150 |

| 2EDjes2hlZo | https://images.unsplash.com/photo-142014251503… | [-00154126640409231190019234161823987960[-00154126640409231190019234161823987960[-00154126640409231190019234161823987960[-00154126640409231190019234161823987960 |

Let’s create a table depending on the data frame.

Insert data

Let’s insert the data into the newly created table:

Note: The MSTG algorithm was developed by MyScale and is much faster than other indexing algorithms like IVF and HNSW.

How to query MyScale

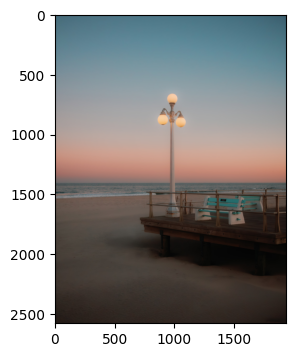

Once the data is loaded, we are ready to use MyScale to query the data and use multimodal to get the images. So first let’s try to get a random image from the table.

How to get relevant images using text and image

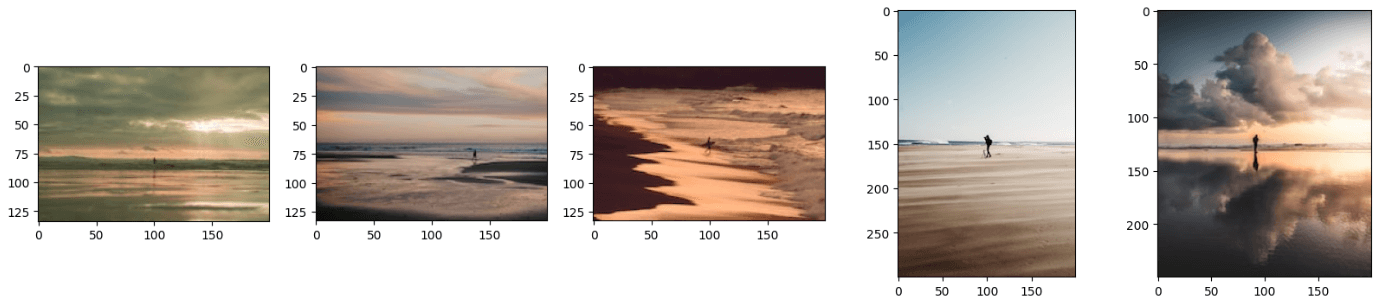

As you have learned, a multimodal model can handle multiple modalities of data at the same time. Similarly, our model can process both images and text simultaneously, providing relevant images. We will submit the following image with the text: ‘A man standing on the beach.’

Let’s pass the URL of the image with the text to create_embeddings function.

The code above will generate results similar to this:

Note: You can further improve the results by using better insertion merge techniques.

You may have noticed that the resulting images look like a combination of text and an image. You can also get results by just adding an image or text to this model and it will work perfectly. For that you simply need to comment any image_url or query_text line of code.

Conclusion

Traditional models are used to obtain vector representations of only one type of data, but the latest models are trained on much more data and can now represent different types of data in a single vector space. We took advantage of the latest model, CLIP, to develop an application that takes both text and images as input and returns relevant images.

The possibilities of multimodal embeddings are not limited to image search applications; rather, you can use this cutting-edge technique to develop cutting-edge recommendation systems, visual Q&A apps where users can ask image-related questions, and more. As you develop these applications, consider using MyScale(opens a new windowan integrated SQL vector database that allows you to store vector inserts and tabular data from your dataset with super fast data retrieval capabilities.