MongoDB is one of the most reliable and powerful document-oriented NoSQL databases. It enables developers to build feature-rich applications and services with various modern built-in features, such as machine learning, streaming, full-text search, etc. Although not a classic relational database, MongoDB is used across a wide range of business sectors, and its use cases cover all kinds of architecture scenarios and data types.

Document-oriented databases are inherently different from traditional relational databases, where data is stored in tables and a single entity can be spread across several of them. In contrast, document databases store data in separate, unrelated collections, which eliminates the intrinsic weight of the relational model. However, given that real-world domain models are never so simple as to consist of unrelated, separate entities, document databases (including MongoDB) provide several ways to define multi-collection relationships similar to classical database relationships, but much easier, more cost-effective and more efficient.

Quarkus, the “supersonic and subatomic” Java stack, is the new kid on the block that the hippest and most influential developers are eagerly grabbing and fighting over. Its modern cloud-native features, its performance (on par with the best standard libraries) and its ability to build native executables have been seducing Java developers, architects, engineers and software designers for a couple of years.

We cannot go into further details about MongoDB or Quarkus here: the reader who wants to know more is invited to check the documentation on the official MongoDB website or the Quarkus website. What we are trying to achieve here is to implement a relatively complex use case consisting of CRUDing a customer-order-product domain model using Quarkus and its MongoDB extension. In an attempt to provide a real-world inspired solution, we try to avoid simplistic and cartoonish examples based on a single entity model without connectivity (there are dozens of them today).

So let’s go!

Domain model

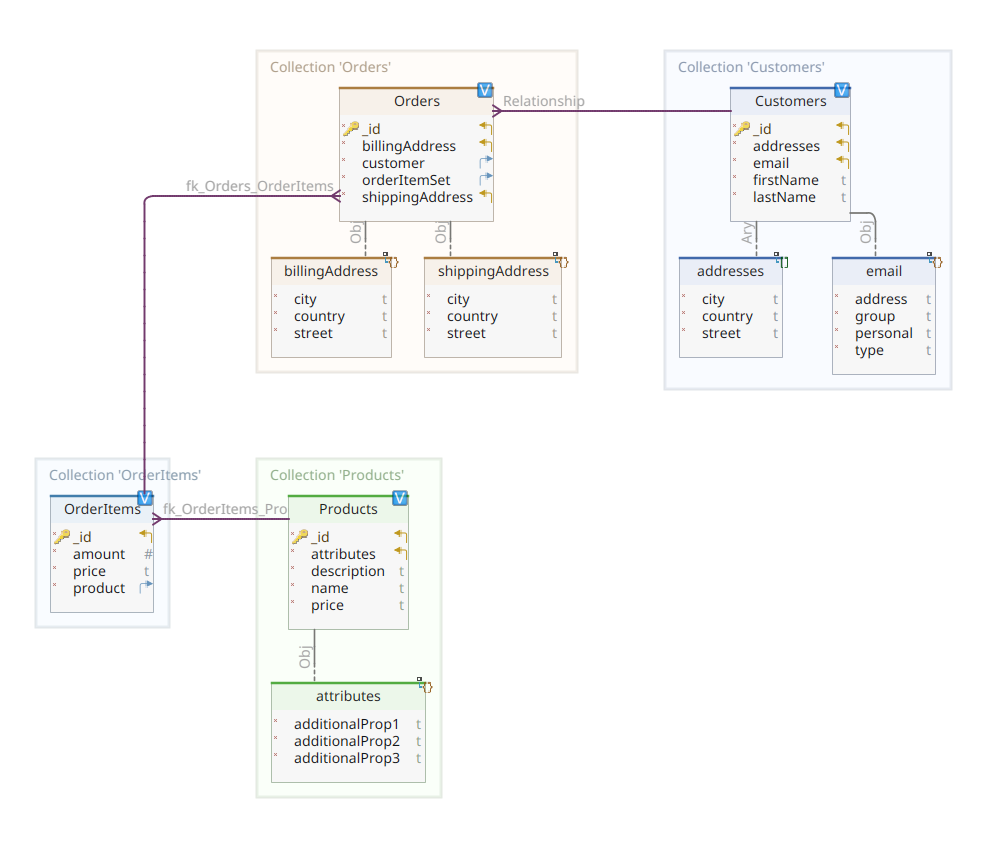

The diagram below shows ours customer-order-product domain model:

As you can see, the central document of the model is Order, stored in a dedicated collection named Orders. An Order is an aggregate of OrderItem documents, each of which points to its associated Product. An Order document also refers to the Customer who placed it. In Java, this is implemented as follows:

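```java
import io.quarkus.mongodb.panache.common.MongoEntity;
import jakarta.mail.internet.InternetAddress;
import org.bson.codecs.pojo.annotations.BsonId;

import java.util.Set;

@MongoEntity(database = "mdb", collection = "Customers")
public class Customer {
  @BsonId
  private Long id;
  private String firstName, lastName;
  private InternetAddress email;
  private Set<Address> addresses;
  // ...
}
```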

The above code shows a fragment of the Customer class. This is a POJO (Plain Old Java Object) annotated with @MongoEntity, whose parameters define the name of the database and the name of the collection. The @BsonId annotation is used to configure a unique document identifier. Although the most common approach is to implement the document identifier as an instance of the ObjectId class, this would introduce a needless tight coupling between MongoDB-specific classes and our document. The other properties are the customer’s first and last name, email address, and set of mailing addresses.

Now let’s look at the Order document.

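```java
import com.mongodb.DBRef;
import io.quarkus.mongodb.panache.common.MongoEntity;
import org.bson.codecs.pojo.annotations.BsonId;

import java.util.HashSet;
import java.util.Set;

@MongoEntity(database = "mdb", collection = "Orders")
public class Order {
  @BsonId
  private Long id;
  // Reference to the Customer who placed the order, not an embedded copy
  private DBRef customer;
  private Address shippingAddress;
  private Address billingAddress;
  // References to the OrderItem documents of this order
  private Set<DBRef> orderItemSet = new HashSet<>();
  // ...
}
```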

Here we need to create a link between the order and the customer who placed it. We could have embedded the associated Customer document in our Order document, but that would be a poor design, because it would redundantly store the same object twice. Instead, we use a reference to the associated Customer document, by means of the DBRef class. The same applies to the set of associated order items: instead of embedding documents, we use a set of references.
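To make this trade-off concrete, here is a JDK-only sketch of reference-based linking. It does not use the MongoDB driver: Ref is a hypothetical stand-in for DBRef that holds the same three pieces of information (database, collection, id), and ToyStore, CustomerDoc and OrderDoc are illustrative names, not classes from the project.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical stand-in for com.mongodb.DBRef: where the target document
// lives (database and collection) plus its id, and nothing else.
record Ref(String databaseName, String collectionName, Object id) {}

// Hypothetical, simplified documents: the order holds a reference, not a copy.
record CustomerDoc(Long id, String firstName, String lastName) {}

record OrderDoc(Long id, Ref customer) {}

// A toy in-memory "database": collection name -> (id -> document).
class ToyStore {
  private final Map<String, Map<Object, Object>> collections = new HashMap<>();

  void save(String collection, Object id, Object document) {
    collections.computeIfAbsent(collection, name -> new HashMap<>()).put(id, document);
  }

  // Resolving a reference costs one extra lookup in the target collection.
  <T> Optional<T> resolve(Ref ref, Class<T> type) {
    Object document = collections.getOrDefault(ref.collectionName(), Map.of()).get(ref.id());
    return type.isInstance(document) ? Optional.of(type.cast(document)) : Optional.empty();
  }
}
```

Because the order stores only a reference, updating the customer once is enough for every order that points to it; with embedding, each order would carry its own, possibly stale, copy. The price is an extra lookup whenever the reference is resolved.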

The rest of our domain model is quite similar and based on the same normalization ideas; for example, the OrderItem document:

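```java
import com.mongodb.DBRef;
import io.quarkus.mongodb.panache.common.MongoEntity;
import org.bson.codecs.pojo.annotations.BsonId;

import java.math.BigDecimal;

@MongoEntity(database = "mdb", collection = "OrderItems")
public class OrderItem {
  @BsonId
  private Long id;
  // Reference to the Product this order item is about
  private DBRef product;
  private BigDecimal price;
  private int amount;
  // ...
}
```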

Here, the DBRef associates the order item with the product it is about. Last but not least, we have the Product document:

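```java
import io.quarkus.mongodb.panache.common.MongoEntity;
import org.bson.codecs.pojo.annotations.BsonId;

import java.math.BigDecimal;
import java.util.HashMap;
import java.util.Map;

@MongoEntity(database = "mdb", collection = "Products")
public class Product {
  @BsonId
  private Long id;
  private String name, description;
  private BigDecimal price;
  private Map<String, String> attributes = new HashMap<>();
  // ...
}
```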

That’s pretty much it for our domain model. However, there are some additional packages we need to look at: serializers and codecs.

To be exchanged on the wire, all our objects, be they business or purely technical, must be serialized and deserialized. Dedicated components, called serializers/deserializers, are responsible for these operations. As we have seen, we use the DBRef type to define the association between different collections. Like any other object, a DBRef instance must be serializable/deserializable.

The MongoDB driver provides serializers/deserializers for most of the data types used in common cases. However, for some reason, it doesn’t provide any for the DBRef type, so we have to implement our own, which is what the serializers package does. Let’s look at these classes:

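```java
import com.fasterxml.jackson.core.JsonGenerator;
import com.fasterxml.jackson.databind.SerializerProvider;
import com.fasterxml.jackson.databind.ser.std.StdSerializer;
import com.mongodb.DBRef;

import java.io.IOException;

public class DBRefSerializer extends StdSerializer<DBRef> {

  public DBRefSerializer() {
    this(DBRef.class);
  }

  protected DBRefSerializer(Class<DBRef> dbrefClass) {
    super(dbrefClass);
  }

  @Override
  public void serialize(DBRef dbRef, JsonGenerator jsonGenerator, SerializerProvider serializerProvider)
      throws IOException {
    if (dbRef != null) {
      // Write the three DBRef components as a flat JSON object
      jsonGenerator.writeStartObject();
      // String.valueOf() works whether the id is a String or a Long (like our
      // entity ids); a (String) cast would throw ClassCastException for a Long
      jsonGenerator.writeStringField("id", String.valueOf(dbRef.getId()));
      jsonGenerator.writeStringField("collectionName", dbRef.getCollectionName());
      jsonGenerator.writeStringField("databaseName", dbRef.getDatabaseName());
      jsonGenerator.writeEndObject();
    }
  }
}
```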

This is our DBRef serializer and, as you can see, it is a Jackson serializer. That’s because the quarkus-mongodb-panache extension we use here relies on Jackson. Perhaps a future release will use JSON-B, but for now we’re sticking with Jackson. The class extends StdSerializer as usual and serializes its associated DBRef object using the JsonGenerator passed as an input argument, writing the DBRef components to the output stream, i.e. the object ID, the collection name and the database name. For more information about the DBRef structure, see the MongoDB documentation.

The deserializer performs a complementary operation, as shown below:

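```java
import com.fasterxml.jackson.core.JacksonException;
import com.fasterxml.jackson.core.JsonParser;
import com.fasterxml.jackson.databind.DeserializationContext;
import com.fasterxml.jackson.databind.JsonNode;
import com.fasterxml.jackson.databind.deser.std.StdDeserializer;
import com.mongodb.DBRef;

import java.io.IOException;

public class DBRefDeserializer extends StdDeserializer<DBRef> {

  public DBRefDeserializer() {
    this(DBRef.class);
  }

  public DBRefDeserializer(Class<DBRef> dbrefClass) {
    super(dbrefClass);
  }

  @Override
  public DBRef deserialize(JsonParser jsonParser, DeserializationContext deserializationContext)
      throws IOException, JacksonException {
    // Read the whole JSON object written by DBRefSerializer
    JsonNode node = jsonParser.getCodec().readTree(jsonParser);
    // DBRef(databaseName, collectionName, id); note that the id is restored as a String
    return new DBRef(node.findValue("databaseName").asText(),
        node.findValue("collectionName").asText(),
        node.findValue("id").asText());
  }
}
```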
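The two classes above define a small JSON contract. The following JDK-only sketch reproduces that contract without Jackson, so that it can be run in isolation: Ref is a hypothetical stand-in for DBRef, and the regex-based fromJson() only handles the flat object that toJson() writes. One consequence worth noticing: because the deserializer reads the id with asText(), a Long id such as 10L comes back as the string "10".

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical stand-in for com.mongodb.DBRef (no driver on the classpath).
record Ref(String databaseName, String collectionName, Object id) {}

final class RefJson {
  // Matches "key":"value" pairs. Only adequate for the flat, quote-free object
  // produced by toJson() below; this is not a general JSON parser.
  private static final Pattern FIELD = Pattern.compile("\"(\\w+)\":\"([^\"]*)\"");

  private RefJson() {}

  // Same fields, in the same order, as DBRefSerializer.serialize().
  static String toJson(Ref ref) {
    return "{\"id\":\"" + ref.id() + "\","
        + "\"collectionName\":\"" + ref.collectionName() + "\","
        + "\"databaseName\":\"" + ref.databaseName() + "\"}";
  }

  // Mirrors DBRefDeserializer: every field, including the id, is read as text.
  static Ref fromJson(String json) {
    Map<String, String> fields = new LinkedHashMap<>();
    Matcher matcher = FIELD.matcher(json);
    while (matcher.find()) {
      fields.put(matcher.group(1), matcher.group(2));
    }
    return new Ref(fields.get("databaseName"), fields.get("collectionName"), fields.get("id"));
  }
}
```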

This is pretty much all there is to say regarding serializers/deserializers. Let’s move on to see what the codecs package brings us.

Java objects are stored in the MongoDB database using the BSON (Binary JSON) format. In order to store information, the MongoDB driver needs to map Java objects to their associated BSON representation. It does so through the Codec interface, which contains the abstract methods needed to map Java objects to BSON and vice versa. By implementing this interface, one can define the conversion logic between Java and BSON in both directions. The MongoDB driver includes the necessary Codec implementations for the most common types but, again, when it comes to DBRef, the provided implementation doesn’t support decoding and simply throws UnsupportedOperationException. After contacting the MongoDB driver implementers, I was unable to find any solution other than implementing my own Codec, namely the DocstoreDBRefCodec class. For brevity, we will not reproduce the source code of this class here.
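Since the source of DocstoreDBRefCodec is not shown, the following is not that class. It is a JDK-only sketch of what a DBRef codec has to do in both directions. SimpleCodec is a hypothetical, simplified mirror of the encode/decode contract of org.bson.codecs.Codec that uses a LinkedHashMap instead of BsonWriter/BsonReader, and Ref is a hypothetical stand-in for DBRef; the $ref, $id and $db field names are MongoDB’s standard DBRef convention.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical stand-in for com.mongodb.DBRef.
record Ref(String databaseName, String collectionName, Object id) {}

// Simplified mirror of the encode/decode contract of org.bson.codecs.Codec<T>;
// a Map plays the role of the BSON document.
interface SimpleCodec<T> {
  Map<String, Object> encode(T value);

  T decode(Map<String, Object> document);

  Class<T> getEncoderClass();
}

// Encodes a reference using MongoDB's conventional DBRef field names:
// $ref (collection), $id (identifier) and, optionally, $db (database).
final class RefCodec implements SimpleCodec<Ref> {
  @Override
  public Map<String, Object> encode(Ref ref) {
    Map<String, Object> document = new LinkedHashMap<>();
    document.put("$ref", ref.collectionName());
    document.put("$id", ref.id());
    if (ref.databaseName() != null) {
      document.put("$db", ref.databaseName());
    }
    return document;
  }

  @Override
  public Ref decode(Map<String, Object> document) {
    // Decoding is the direction the driver's bundled DBRef codec does not support.
    return new Ref((String) document.get("$db"), (String) document.get("$ref"), document.get("$id"));
  }

  @Override
  public Class<Ref> getEncoderClass() {
    return Ref.class;
  }
}
```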

Once our dedicated Codec is implemented, we need to register it with the MongoDB driver, so that it is used when mapping DBRef types to Java objects and vice versa. To do this, we need to implement the CodecProvider interface which, as the DocstoreDBRefCodecProvider class shows, returns via its abstract get() method the concrete class responsible for performing the mapping, i.e. in our case DocstoreDBRefCodec. And that’s all we need to do here, because Quarkus automatically detects and uses our custom CodecProvider implementation. Please take a look at these classes to see and understand how things are done.
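The registration mechanism relies on a simple contract: a provider returns a codec for the classes it knows and null for everything else, and the registry asks each provider in turn. The JDK-only sketch below illustrates that lookup with hypothetical, simplified interfaces (MiniCodec, MiniCodecProvider, MiniRegistry); the real org.bson interfaces additionally pass a CodecRegistry to get() and perform the actual BSON encoding.

```java
import java.util.List;

// Simplified, hypothetical mirrors of org.bson.codecs.Codec and CodecProvider.
interface MiniCodec<T> {
  Class<T> getEncoderClass();
}

interface MiniCodecProvider {
  // Contract borrowed from the driver: return null when this provider
  // does not know how to handle the requested class.
  <T> MiniCodec<T> get(Class<T> clazz);
}

// Hypothetical stand-ins for com.mongodb.DBRef and for DocstoreDBRefCodec.
record Ref(String databaseName, String collectionName, Object id) {}

final class RefCodec implements MiniCodec<Ref> {
  @Override
  public Class<Ref> getEncoderClass() {
    return Ref.class;
  }
}

// Plays the role of DocstoreDBRefCodecProvider.
final class RefCodecProvider implements MiniCodecProvider {
  @Override
  @SuppressWarnings("unchecked")
  public <T> MiniCodec<T> get(Class<T> clazz) {
    if (clazz != Ref.class) {
      return null; // not ours: let the next provider answer
    }
    MiniCodec<?> codec = new RefCodec();
    return (MiniCodec<T>) codec; // safe: T is Ref here
  }
}

// A registry asks each provider in turn and keeps the first non-null answer.
final class MiniRegistry {
  private final List<MiniCodecProvider> providers;

  MiniRegistry(List<MiniCodecProvider> providers) {
    this.providers = List.copyOf(providers);
  }

  <T> MiniCodec<T> get(Class<T> clazz) {
    for (MiniCodecProvider provider : providers) {
      MiniCodec<T> codec = provider.get(clazz);
      if (codec != null) {
        return codec;
      }
    }
    throw new IllegalArgumentException("No codec for " + clazz.getName());
  }
}
```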

Data repositories

Quarkus Panache greatly simplifies the data persistence process by supporting both the active record and the repository design patterns. Here, we will use the second one.

Unlike similar persistence frameworks, Panache relies on bytecode enhancement performed at build time, which Quarkus carries out automatically. All it needs to do its enhancement work is a class like the one below:

```java
import io.quarkus.mongodb.panache.PanacheMongoRepositoryBase;
import jakarta.enterprise.context.ApplicationScoped;

@ApplicationScoped
public class CustomerRepository implements PanacheMongoRepositoryBase<Customer, Long> {
}
```

The code above is all you need to define a complete service that can persist Customer document instances. Your class needs to implement the PanacheMongoRepositoryBase interface, parameterized with your entity type and its ID type, in our case Customer and Long. Panache then provides all the methods needed to perform the most common CRUD operations, including but not limited to saving, updating, deleting, querying, paging, sorting, transaction handling, etc. All these details are fully explained in the Quarkus documentation. Another option is to implement PanacheMongoRepository instead of PanacheMongoRepositoryBase and use the provided ObjectId keys instead of customizing them as Long, as we did in our example. Whichever alternative you choose is just a matter of preference.
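Panache supplies the repository methods, so there is nothing to write beyond the declaration. To illustrate the idea only, here is a hypothetical in-memory equivalent: the method names persist, findByIdOptional, listAll, count and deleteById mirror some of those available on PanacheMongoRepositoryBase, but storage is a plain ConcurrentHashMap rather than MongoDB, and SimpleCustomer is an illustrative record, not the project’s Customer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical in-memory repository, generic in the entity and id types,
// like PanacheMongoRepositoryBase<Entity, Id>. Not backed by MongoDB.
class InMemoryRepository<Entity, Id> {
  private final Map<Id, Entity> store = new ConcurrentHashMap<>();
  private final Function<Entity, Id> idOf;

  InMemoryRepository(Function<Entity, Id> idOf) {
    this.idOf = idOf;
  }

  void persist(Entity entity) {
    store.put(idOf.apply(entity), entity);
  }

  Optional<Entity> findByIdOptional(Id id) {
    return Optional.ofNullable(store.get(id));
  }

  List<Entity> listAll() {
    return new ArrayList<>(store.values());
  }

  long count() {
    return store.size();
  }

  boolean deleteById(Id id) {
    return store.remove(id) != null;
  }
}

// Hypothetical minimal entity with a Long id.
record SimpleCustomer(Long id, String firstName, String lastName) {}

// As with CustomerRepository, all behavior is inherited; only the types are fixed.
class SimpleCustomerRepository extends InMemoryRepository<SimpleCustomer, Long> {
  SimpleCustomerRepository() {
    super(SimpleCustomer::id);
  }
}
```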

REST API

To make our Panache-generated persistence service useful, we need to expose it via a REST API. In the most common case, we have to create this API manually, along with its implementation, which consists of the full set of required REST endpoints. This finicky and repetitive work can be avoided by using the quarkus-mongodb-rest-data-panache extension, which can automatically generate the necessary REST endpoints from interfaces of the following form:

```java
import io.quarkus.mongodb.rest.data.panache.PanacheMongoRepositoryResource;

public interface CustomerResource
    extends PanacheMongoRepositoryResource<CustomerRepository, Customer, Long> {
}
```

Believe it or not, this is all you need to generate a full REST API implementation, with all the endpoints needed to call the persistence service previously generated by the mongodb-panache extension. We are now ready to build our REST API as a Quarkus microservice. We decided to build this microservice as a Docker image, using the quarkus-container-image-jib extension, simply by including the following Maven dependency:

```xml
<dependency>
  <groupId>io.quarkus</groupId>
  <artifactId>quarkus-container-image-jib</artifactId>
</dependency>
```

The quarkus-maven-plugin will then create a local Docker image to run our microservice. The parameters of this Docker image are defined in the application.properties file, as follows:

```properties
quarkus.container-image.build=true
quarkus.container-image.group=quarkus-nosql-tests
quarkus.container-image.name=docstore-mongodb
quarkus.mongodb.connection-string = mongodb://admin:admin@mongo:27017
quarkus.mongodb.database = mdb
quarkus.swagger-ui.always-include=true
quarkus.jib.jvm-entrypoint=/opt/jboss/container/java/run/run-java.sh
```

Here we define the name of the newly created Docker image as quarkus-nosql-tests/docstore-mongodb, i.e. the concatenation of quarkus.container-image.group and quarkus.container-image.name, separated by a "/". The property quarkus.container-image.build, set to true, instructs the Quarkus plugin to bind the image build to the Maven package phase. This way, simply by running the mvn package command, we generate a Docker image that can run our microservice, which can be checked with the docker images command. The property quarkus.jib.jvm-entrypoint defines the command that the newly generated Docker image will run. quarkus-run.jar is the standard Quarkus microservice startup file used with the ubi8/openjdk-17-runtime base image, as in our case. The properties quarkus.mongodb.connection-string and quarkus.mongodb.database define the MongoDB connection and the database name. Last but not least, the property quarkus.swagger-ui.always-include includes the Swagger UI in the packaged application, not only in dev mode, which allows us to test it easily.
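The resulting image reference can be worked out from these properties alone. The JDK-only sketch below does so with java.util.Properties; ImageNames is a hypothetical helper, and it assumes that, when quarkus.container-image.tag is not set, the tag falls back to the application version, which is consistent with the 1.0-SNAPSHOT tag used in the docker-compose.yml file.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper: composes group + "/" + name + ":" + tag the way the
// container-image build is expected to. Assumption: without an explicit
// quarkus.container-image.tag, the project version is used as the tag.
final class ImageNames {
  private ImageNames() {}

  static String imageReference(String applicationProperties, String projectVersion) {
    Properties props = new Properties();
    try {
      props.load(new StringReader(applicationProperties));
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
    String group = props.getProperty("quarkus.container-image.group");
    String name = props.getProperty("quarkus.container-image.name");
    String tag = props.getProperty("quarkus.container-image.tag", projectVersion);
    String repository = (group == null || group.isBlank()) ? name : group + "/" + name;
    return repository + ":" + tag;
  }
}
```

With the properties above and the project version 1.0-SNAPSHOT, this yields quarkus-nosql-tests/docstore-mongodb:1.0-SNAPSHOT, the image name that the docstore service refers to.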

Now let’s see how to run and test the whole thing.

Launching and testing our microservices

Now that we’ve looked at the details of our implementation, let’s see how to run and test it. We decided to do it using the docker-compose utility. Here is the associated docker-compose.yml file:

```yaml
version: "3.7"
services:
  mongo:
    image: mongo
    environment:
      MONGO_INITDB_ROOT_USERNAME: admin
      MONGO_INITDB_ROOT_PASSWORD: admin
      MONGO_INITDB_DATABASE: mdb
    hostname: mongo
    container_name: mongo
    ports:
      - "27017:27017"
    volumes:
      - ./mongo-init/:/docker-entrypoint-initdb.d/:ro
  mongo-express:
    image: mongo-express
    depends_on:
      - mongo
    hostname: mongo-express
    container_name: mongo-express
    links:
      - mongo:mongo
    ports:
      - 8081:8081
    environment:
      ME_CONFIG_MONGODB_ADMINUSERNAME: admin
      ME_CONFIG_MONGODB_ADMINPASSWORD: admin
      ME_CONFIG_MONGODB_URL: mongodb://admin:admin@mongo:27017/
  docstore:
    image: quarkus-nosql-tests/docstore-mongodb:1.0-SNAPSHOT
    depends_on:
      - mongo
      - mongo-express
    hostname: docstore
    container_name: docstore
    links:
      - mongo:mongo
      - mongo-express:mongo-express
    ports:
      - "8080:8080"
      - "5005:5005"
    environment:
      JAVA_DEBUG: "true"
      JAVA_APP_DIR: /home/jboss
      JAVA_APP_JAR: quarkus-run.jar
```

This file instructs the docker-compose utility to run three services:

- a service called mongo, running a MongoDB 7 database;
- a service called mongo-express, running the MongoDB administrative interface;
- a service called docstore, running our Quarkus microservice.

Note that the mongo service uses an initialization script mounted on the container’s docker-entrypoint-initdb.d directory. This initialization script creates a MongoDB database named mdb, so that it can be used by the microservice.

```javascript
// Authenticate as root against the "admin" database, where the root user is defined
db = db.getSiblingDB("admin");
db.auth(
  process.env.MONGO_INITDB_ROOT_USERNAME,
  process.env.MONGO_INITDB_ROOT_PASSWORD,
);
// Switch to the application database (mdb)
db = db.getSiblingDB(process.env.MONGO_INITDB_DATABASE);
db.createUser({
  user: "nicolas",
  pwd: "password1",
  roles: [
    {
      role: "dbOwner",
      db: "mdb",
    },
  ],
});
db.createCollection("Customers");
db.createCollection("Products");
db.createCollection("Orders");
db.createCollection("OrderItems");
```

This is the initialization JavaScript, which creates a user named nicolas and a new database named mdb. The user has owner privileges (the dbOwner role) on this database. Four new collections, named Customers, Products, Orders and OrderItems, are also created.

To test the microservice, proceed as follows:

- Clone the GitHub repository:

```shell
$ git clone https://github.com/nicolasduminil/docstore.git
```

- Go to the project directory:

```shell
$ cd docstore
```

- Build the project:

```shell
$ mvn clean install
```

- Verify that all the required Docker containers are running:

```shell
$ docker ps
CONTAINER ID   IMAGE                                               COMMAND                  CREATED         STATUS         PORTS                                                                                            NAMES
7882102d404d   quarkus-nosql-tests/docstore-mongodb:1.0-SNAPSHOT   "/opt/jboss/containe…"   8 seconds ago   Up 6 seconds   0.0.0.0:5005->5005/tcp, :::5005->5005/tcp, 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp, 8443/tcp   docstore
786fa4fd39d6   mongo-express                                       "/sbin/tini -- /dock…"   8 seconds ago   Up 7 seconds   0.0.0.0:8081->8081/tcp, :::8081->8081/tcp                                                        mongo-express
2e850e3233dd   mongo                                               "docker-entrypoint.s…"   9 seconds ago   Up 7 seconds   0.0.0.0:27017->27017/tcp, :::27017->27017/tcp                                                    mongo
```

- Run the integration tests:

```shell
$ mvn -DskipTests=false failsafe:integration-test
```

This last command runs all the integration tests, which should succeed. These integration tests are implemented using the REST Assured library. The listing below shows one of them, located in the docstore-domain project:

```java
import io.quarkus.test.junit.QuarkusIntegrationTest;
import jakarta.mail.internet.AddressException;
import jakarta.mail.internet.InternetAddress;
import org.apache.http.HttpStatus;
import org.junit.jupiter.api.BeforeAll;
import org.junit.jupiter.api.MethodOrderer;
import org.junit.jupiter.api.Order;
import org.junit.jupiter.api.Test;
import org.junit.jupiter.api.TestMethodOrder;

import static io.restassured.RestAssured.given;
import static org.assertj.core.api.Assertions.assertThat;

@QuarkusIntegrationTest
@TestMethodOrder(MethodOrderer.OrderAnnotation.class)
public class CustomerResourceIT {
  private static Customer customer;

  @BeforeAll
  public static void beforeAll() throws AddressException {
    // Illustrative address (example.com is reserved for documentation)
    customer = new Customer("John", "Doe", new InternetAddress("john.doe@example.com"));
    customer.addAddress(new Address("Gebhard-Gerber-Allee 8", "Kornwestheim", "Germany"));
    customer.setId(10L);
  }

  @Test
  @Order(10)
  public void testCreateCustomerShouldSucceed() {
    given()
      .header("Content-type", "application/json")
      .and().body(customer)
      .when().post("/customer")
      .then()
      .statusCode(HttpStatus.SC_CREATED);
  }

  @Test
  @Order(20)
  public void testGetCustomerShouldSucceed() {
    assertThat(given()
      .header("Content-type", "application/json")
      .when().get("/customer")
      .then()
      .statusCode(HttpStatus.SC_OK)
      .extract().body().jsonPath().getString("firstName[0]")).isEqualTo("John");
  }

  @Test
  @Order(30)
  public void testUpdateCustomerShouldSucceed() {
    customer.setFirstName("Jane");
    given()
      .header("Content-type", "application/json")
      .and().body(customer)
      .when().pathParam("id", customer.getId()).put("/customer/{id}")
      .then()
      .statusCode(HttpStatus.SC_NO_CONTENT);
  }

  @Test
  @Order(40)
  public void testGetSingleCustomerShouldSucceed() {
    assertThat(given()
      .header("Content-type", "application/json")
      .when().pathParam("id", customer.getId()).get("/customer/{id}")
      .then()
      .statusCode(HttpStatus.SC_OK)
      .extract().body().jsonPath().getString("firstName")).isEqualTo("Jane");
  }

  @Test
  @Order(50)
  public void testDeleteCustomerShouldSucceed() {
    given()
      .header("Content-type", "application/json")
      .when().pathParam("id", customer.getId()).delete("/customer/{id}")
      .then()
      .statusCode(HttpStatus.SC_NO_CONTENT);
  }

  @Test
  @Order(60)
  public void testGetSingleCustomerShouldFail() {
    given()
      .header("Content-type", "application/json")
      .when().pathParam("id", customer.getId()).get("/customer/{id}")
      .then()
      .statusCode(HttpStatus.SC_NOT_FOUND);
  }
}
```
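None of the paths used by these tests (/customer and /customer/{id}) is declared explicitly: since CustomerResource declares no path, they presumably come from the default naming rule of the REST Data Panache extension. The JDK-only sketch below mimics that derivation, assuming the rule described in the Quarkus guide (strip a Resource or Controller suffix, then hyphenate and lower-case the remaining name). ResourcePaths is a hypothetical helper, not part of Quarkus.

```java
import java.util.Locale;

// Hypothetical helper mimicking the assumed default base-path rule for
// REST Data Panache resources: drop a "Resource"/"Controller" suffix,
// then turn the camel-case remainder into hyphenated lower case.
final class ResourcePaths {
  private ResourcePaths() {}

  static String defaultPath(String interfaceName) {
    String base = interfaceName;
    for (String suffix : new String[] {"Resource", "Controller"}) {
      if (base.endsWith(suffix) && base.length() > suffix.length()) {
        base = base.substring(0, base.length() - suffix.length());
        break;
      }
    }
    // "OrderItem" -> "Order-Item" -> "order-item"
    return base.replaceAll("([a-z0-9])([A-Z])", "$1-$2").toLowerCase(Locale.ROOT);
  }
}
```

As the tests above show, the generated endpoints answer POST /customer with 201 Created, PUT and DELETE on /customer/{id} with 204 No Content, and a GET on a deleted id with 404 Not Found.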

You can also use the Swagger UI for testing purposes by pointing your preferred browser at http://localhost:8080/q/swagger-ui. Then, to test the endpoints, you can use the payloads in the JSON files located in the src/resources/data directory of the docstore-api project.

You can also use the mongo-express administrative interface by going to http://localhost:8081 and authenticating with its default credentials (admin/pass).

You can find the source code of the project in my GitHub repository.

Enjoy!