Ferret in the wild [Pixabay/Michael Sehlmeyer]

Apple’s Ferret LLM could enable Siri to understand the layout of apps on an iPhone’s screen, potentially increasing the capabilities of Apple’s digital assistant.

Apple has been working on a number of machine learning and artificial intelligence projects that it could tease at WWDC 2024. A newly published paper suggests that some of that work could help Siri understand what apps and iOS itself look like.

The paper, released Monday via Cornell University, is titled “Ferret-UI: Grounded Mobile UI Understanding with Multimodal LLMs.” It describes a new multimodal large language model (MLLM) that has the potential to understand the user interfaces of mobile screens.

The name Ferret originally came from an open-source multimodal LLM published in October by Cornell University researchers working with Apple colleagues. At the time, Ferret was able to detect and understand different parts of an image for complex queries, such as identifying the type of animal in a selected part of a photo.

Advancements in LLMs

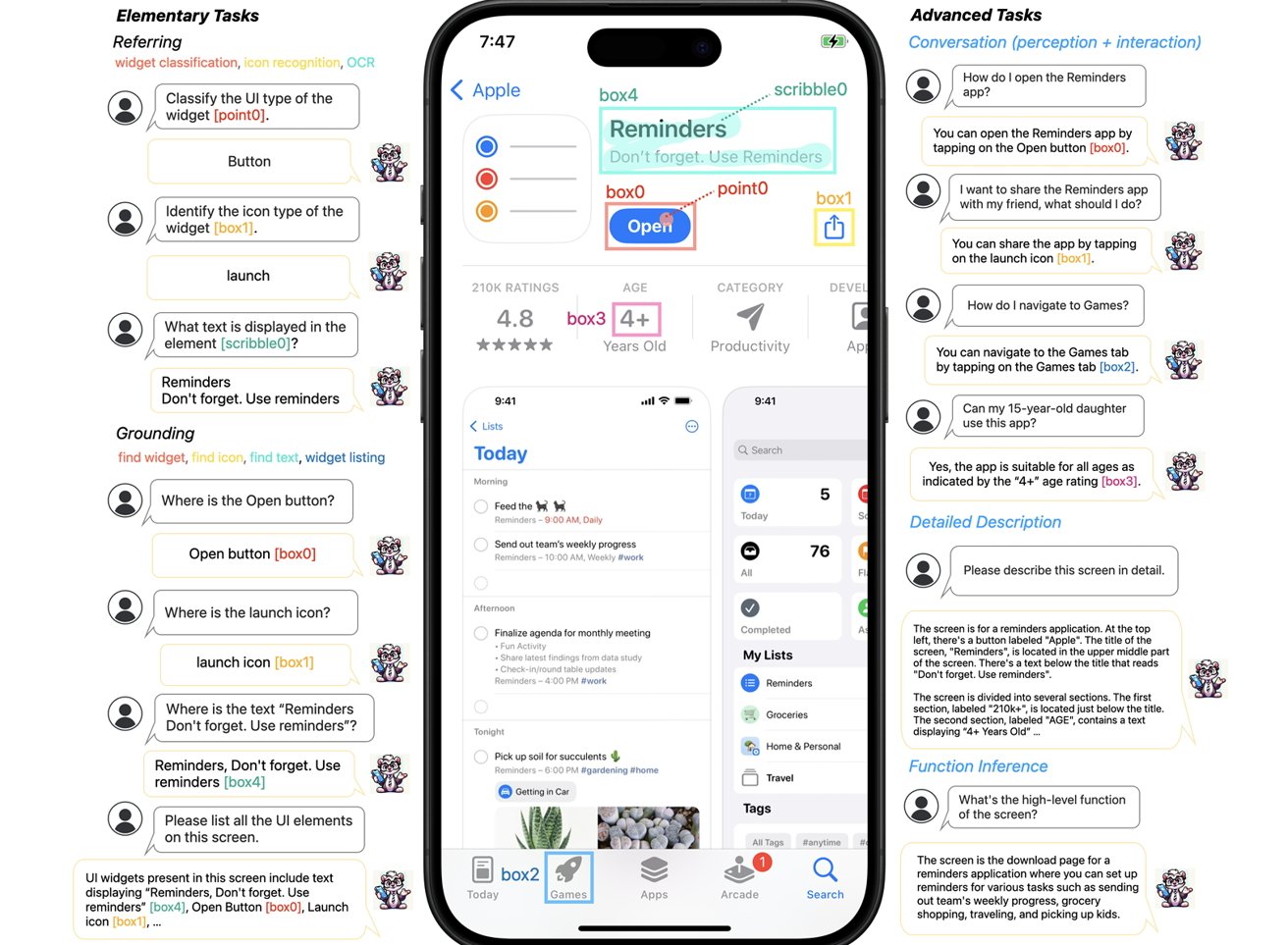

The new paper for Ferret-UI explains that while there have been significant advances in the use of MLLMs, they still “fall short in their ability to comprehend and interact effectively with user interface (UI) screens.” Ferret-UI is described as a new MLLM tailored to understand mobile UI screens, complete with “referring, grounding, and reasoning capabilities.”

Part of the problem LLMs have in understanding a mobile display’s interface is how the display is used in the first place. Screens are often in portrait orientation, which means that icons and other details can take up a very compact portion of the display, making them difficult for machines to understand.

To help with this, Ferret has a magnification system that upscales images to “any resolution,” making icons and text more readable.

Example of Ferret-UI iPhone screen analysis

For processing and training, Ferret also divides the screen into two smaller sections, cutting it in half. The paper notes that other LLMs tend to scan a lower-resolution global image, which reduces their ability to adequately determine what icons look like.
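The halving step can be illustrated with a short Python sketch. This is not code from the paper: the function name `split_screen`, the choice to cut across the longer side, and the 1170 x 2532 screenshot size are all assumptions made for illustration.

```python
from typing import List, Tuple

# A crop box in pixels: (left, top, right, bottom)
Box = Tuple[int, int, int, int]


def split_screen(width: int, height: int) -> List[Box]:
    """Return two crop boxes that cut a screenshot in half.

    Assumption (not confirmed by the article): the cut runs across the
    longer side, so a portrait screenshot yields a top and a bottom half.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if height >= width:
        # Portrait or square: split into top and bottom halves.
        mid = height // 2
        return [(0, 0, width, mid), (0, mid, width, height)]
    # Landscape: split into left and right halves.
    mid = width // 2
    return [(0, 0, mid, height), (mid, 0, width, height)]


# Hypothetical portrait screenshot size, used only as an example.
for box in split_screen(1170, 2532):
    print(box)
```

Each half could then be examined at a higher effective resolution than a single downscaled view of the whole screen, which is the advantage the paper is reported to claim over scanning only a global image.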

Combined with significant processing of training data, this resulted in a model that can sufficiently understand user queries, understand the nature of different elements on the screen, and offer contextual responses.

For example, a user may ask how to open the Reminders app and be told to tap the Open button on the screen. A follow-up question asking whether a 15-year-old can use the app could prompt the model to check the age guidelines, if they are visible on the screen.

An assistive assistant

While we don’t know if it will be built into systems like Siri, Ferret-UI offers the possibility of advanced control over a device like the iPhone. By understanding user interface elements, it could allow Siri to perform actions for users in apps by selecting graphical elements within the app on its own.

There are also useful applications for visually impaired people. Such an LLM might be better able to explain in detail what is on the screen and potentially perform actions for the user without the user having to do anything other than ask for it to happen.